Maspack Reference Manual

Last update: September, 2021

Contents

- Preface

- 1 Properties

-

2 Rendering

- 2.1 Overview

- 2.2 Viewers

-

2.3 The Renderer Interface

- 2.3.1 Drawing single points and lines

- 2.3.2 Drawing single triangles

- 2.3.3 Colors and associated attributes

- 2.3.4 Drawing using draw mode

- 2.3.5 Drawing solid shapes

- 2.3.6 Shading and color mixing

- 2.3.7 Vertex coloring, and color mixing and interpolation

- 2.3.8 Changing the model matrix

- 2.3.9 Render properties and RenderProps

- 2.3.10 Screen information and 2D rendering

- 2.3.11 Depth offsets

- 2.3.12 Maintaining the graphics state

- 2.3.13 Text rendering

- 2.4 Render Objects

- 2.5 Texture mapping

- 2.6 Mesh Renderers

- 2.7 Object Selection

Preface

Maspack (modeling and simulation package) is a set of Java packages to support physical modeling and simulation. The purpose of this guide is to document some of these packages in detail, one package per chapter. At present, the guide is incomplete and documents only the property and rendering packages. More chapters will be added as resources permit.

Chapter 1 Properties

The maspack property package (maspack.properties) provides a uniform means by which classes can export specific attributes and information about them to application software. The main purpose of properties is to

-

1.

Provide generic code for accessing and modifying attributes.

-

2.

Remove the need for "boiler-plate" code to read or write attributes from persistent storage, or modify them by other means such as a GUI panel.

The property software uses Java reflection to obtain information about a property’s value and its associated class, in a manner similar to that used by the properties of Java Beans.

1.1 Accessing Properties

Any class can export properties by implementing the interface HasProperties:

Each property is associated with a name, which must be a valid Java identifier. The getProperty() method returns a Property handle to the named property, which can in turn be used to access that property’s values or obtain other information about it. getAllPropertyInfo() returns a PropertyInfoList providing information about all the properties associated with the class.

A Property handle supplies the following methods:

- get()

-

Returns the property’s value. As a rule, returned returned values should be treated as read-only.

- set()

-

Sets the property’s value (unless it is read-only, see Section 1.2).

- getRange()

-

Returns a Range object for a property (see Section 1.1.1), which is used to determine which values are admissible to set. If all values are admissible, getRange() can return null.

- getHost()

-

Returns the object to which this property handle belongs.

- getInfo()

-

Returns static information about this property (see Section 1.2).

A simple example of property usage is given below. Assume that we have a class called DeformableModel which contains a property called stiffness, and we want to set the stiffness to 1000. This could be done as follows:

Of course, DeformableModel will likely have a method called setStiffness that can be used to set the stiffness directly, without having to got through the Property interface. However, the purpose of properties is not to facilitate attribute access within specially constructed code; it is to facilitate attribute access within generic code that is hidden from the user. For instance, suppose I want to query a property value from a GUI. The GUI must obtain the name of the desired property from the user (e.g., through a menu or a text box), and then given only that name, it must go and obtain the necessary information from the object exporting that property. A Property allows this to be done in a manner independent of the nature of the property itself.

1.1.1 Why Property Handles?

In theory, one could embed the methods of Property directly within the HasProperties interface, using methods with signatures like

The main reason for not doing this is performance: a property handle can access the attribute quickly, without having to resolve the property’s name each time.

Each property handle contains a back-pointer to the object containing, or hosting, the property, which can be obtained with the getHost() method.

1.2 Property Ranges

A Range object supplies information about what values a particular Property can be set to. It contains the following methods:

- isValid()

-

Returns true if obj is a valid argument to the property’s set method. The optional argument errMsg, if not null, is used to return an error message in case the object is not valid.

- makeValid()

-

Trys to turn obj into a valid argument for set(). If obj is a valid argument, then it is returned directly. Otherwise, the method tries to return an object close to obj that is in the valid range. If this is not possible, the method returns Range.IllegalValue.

- intersect()

-

Intersects the current range with another range and placed the result in this range. The resulting range should admit values that were admissible by both previous ranges.

- isEmpty()

-

Returns true if this range has no admissible values. This is most likely to occur as the result of an intersection operation.

Possible usage of a range object is shown below:

Two common examples of Range objects are DoubleInterval and IntegerInterval, which implement intervals of double and integer values, respectively. Ranges are also Clonable, which means that they can be duplicated by calling range.clone().

1.3 Obtaining Property Information

Additional information about a property is available through the PropertyInfo interface, which can be obtained using the getInfo() method of the property handle. Information supplied by PropertyInfo is static with respect to the exporting class and does not change (unlike the property values themselves, which do change). Such information includes the property’s name, whether or not it is read-only, and a comment describing what the property does.

Some of the PropertyInfo methods include:

Property information can also be obtained directly from the exporting class, using getAllPropertyInfo(), which returns information for all the properties exported by that class. This information is contained within a PropertyInfoList:

For example, suppose we want to print the names of all the properties associated with a given class. This could be done as follows:

1.4 Exporting Properties from a Class

As indicated above, a class can export properties by implementing the interface HasProperties, along with the supporting interfaces Property, PropertyInfo, and PropertyInfoList. The class developer can do this in any way desired, but support is provided to make this fairly easy.

The standard approach is to create a static instance of PropertyList for the exporting class, and then populate it with PropertyInfo structures for the various exported properties. This PropertyList (which implements PropertyInfoList) can then be used to implement the getProperty() and getAllPropertyInfo() methods required by HasProperties:

Information about specific properties should be added to PropertyList within a static code block (second line in the above fragment). This can be done directly using the method

but this requires creating and initializing a PropertyInfo object. An easier way is to use a different version of the add method, which creates the required PropertyInfo structure based on information supplied through its arguments. In the example below, we have a class called ThisHost which exports three properties called visible, lineWidth, and color:

The values for the three properties are stored in the fields myLineWidth, myVisibleP, and myColor. Default values for these are defined by static fields.

A static instance of a PropertyList is created, using a constructor which takes the exporting class as an argument (in Java 1.5, the class object for a class can be referenced as ClassName.class). Information for each property is then added within a static block, using the convenience method

The first argument, nameAndMethods, is a string which gives the name of the property, optionally followed by whitespace-separated names of the accessor methods for the property’s value:

These accessor methods should have the signatures

If any of the methods are not specified, or are specified by a ’*’ character, then the system with look for accessor methods with the names getXxx, setXxx, and getXxxRange, where xxx is the name of the property. If no getRangeMethod is defined (and no numeric range is specfied in the options argument string, as described below), then the property will be assumed to have no range limitations and its getRange() method will return null.

The second argument, description, gives a textual description of the property, and is used for generating help messages or tool-tip text.

The third argument, defaultValue, is a default property value, which is used for automatic initialization and for deciding whether the property’s value needs to be written explicitly to persistent storage.

An extended version of the add method takes an additional argument options:

The options argument is a sequence of option tokens specifing various property attributes, each of which can be queried using an associated PropertyInfo method. Token are separated by white space and may appear in any order. Some have have both long and abbreviated forms. They include:

- NW, NoAutoWrite

-

Disables this property from being automatically written using the PropertyList methods write and writeNonDefaults (Section 1.5). Causes the PropertyInfo method getAutoWrite() to return false.

- AW, AutoWrite (Default setting)

-

Enables this property to be automatically written using the PropertyList methods write and writeNonDefaults (Section 1.5). Causes the PropertyInfo method getAutoWrite() to return true.

- NE, NeverEdit

-

Disables this property from being interactively edited. Causes the PropertyInfo method getEditing() to return Edit.Never.

- AE, AlwaysEdit (Default setting)

-

Enables this property to be interactively edited. Causes the PropertyInfo method getEditing() to return Edit.Always.

- 1E, SingleEdit

-

Enables this property to be interactively edited for one property host at a time. Causes the PropertyInfo method getEditing() to return Edit.Single.

- XE, ExpandedEdit

-

Indicates, where appropriate, that the widget for editing this property can be expanded or contracted to conserve GUI space, and that it is initially expanded. Causes the PropertyInfo method getWidgetExpandState() to return ExpandState.Expanded. This is generally relevant only for properties such as CompositeProperties (Section 1.4.2) whose editing widgets have several sub-widgets.

- CE, ContractedEdit

-

Indicates, where appropriate, that the widget for editing this property can be expanded or contracted to conserve GUI space, and that it is initially contracted. Causes the PropertyInfo method getWidgetExpandState() to return ExpandState.Contracted. This is generally relevant only for properties such as CompositeProperties (Section 1.4.2) whose editing widgets have several sub-widgets.

- NS, NoSlider

-

Indicates that a slider should not be allowed in the widget for editing this property. Causes the PropertyInfo method isSliderAllowed() to return false. In order for the editing widget to contain a slider, the property must also have both a numeric value and a defined range.

- DX, DimensionX

-

Sets the numeric dimension of this property to X. The dimension can be queried using the PropertyInfo method getDimension(). For properties which are non-numeric or do not have a fixed dimension, the dimension will be returned as -1. Note the for some numeric properties, the dimension can be determined automatically and there is no need to explicitly specify this attribute.

- SH, Sharable

-

Indicates that the property value is not copied internally by the host and can therefore be shared among several hosts. This may improve memory efficiency but means that changes to the value itself may be reflected among several hosts. This attribute can be queried by the PropertyInfo method isSharable().

- NV, NullOK

-

Indicates that the property value may be null. By default, this is false, unless the default value has been specified as null. Whether or not a property may be set to null is particularly relevant in the case of CompositeProperties (Section 1.4.2), where one may choose between setting individual subproperties or setting the entrie structure to null altogether.

- %fmt

-

A printf-style format string, beginning with %, used to format numeric information for this property’s value, either in a GUI or when writing to persistent storage. A good general purpose format string to use is often "%.6g", which specifies a free format with six significant characters.

- [l,u]

-

A numeric range interval with a lower bound of l and an upper bound of u. If specified, this defines the value returned by PropertyInfo.getDefaultNumericRange(); otherwise, that method returns null. If a getRangeMethod is not defined for the property, and the property has a numeric type, then the default numeric range is returned by the property’s Property.getRange() method. The default numeric range is also used to determine bounds on slider widgets for manipulating the property’s value, in case the upper or lower limits returned by the Property.getRange() method are unbounded. The symbol inf can be used in an interval range, so that [0,inf] represents the set of non-negative numbers.

The following code fragment shows an example of using the option argument:

The property named radius is given a numeric format string of

"%8.3f", a numeric range in the interval ![]() , and set so

that it will not be displayed in an automatically created GUI panel.

, and set so

that it will not be displayed in an automatically created GUI panel.

1.4.1 Read-only properties

A property can be read-only, which means that it can be read but not set. In particular, the set() for a read-only Property handle is inoperative.

Read-only properties can be specified using the following PropertyList methods:

These are identical to the add methods described above, except that the nameAndMethod argument includes at most a get accessor, and there is no argument for specifying a default value.

The method getAutoWrite() also returns false for read-only properties (since it does not make sense to store them in persistent storage).

1.4.2 Inheriting Properties from a superclass

By default, a subclass of a HasProperties class inherits all the properties exported by the class exports all the properties exported by it’s immediate superclass.

Alternatively, a subclass can create its own properties by creating it’s own PropertyList, as in the code example of Section 1.3:

and none of the properties from the superclass will be exported. Note that it is necessary to redefine getAllPropertyInfo() so that the instance of myProps specific to ThisHost will be returned.

If one wishes to also export properties from the superclass (or some other ancestor class), then a PropertyList can be created which also contains property information from the desired ancestor class. This involves using a different constructor, which takes a second argument specifying the ancestor class from which to copy properties:

All properties exported by Ancestor will now also be exported by ThisHost.

What if we want only some properties from an ancestor class? In that case, we can edit the PropertyList to remove properties we don’t want. We can also replace properties with new ones with the same name but possibly different attributes. The latter may be necessary if the class type of a property’s value changes in the sub-class:

1.5 Composite Properties

A property’s value may itself be an object which exports properties; such an object is known as a composite property, and its properties are called subproperties.

Property handles for subproperties may be obtained from the top-level exporting class using getProperty(), with successive sub-property names delimited by a ’.’ character. For example, if a class exports a property textureProps, whose value is a composite property exporting a sub-property called mode, then a handle to the mode property can be obtained from the top-level class using

which has the same effect as

Composite properties should adhere to a couple of rules. First, they should be returned by reference; i.e., the hosting class should return a pointer to the original property, rather than a copy. Secondly, they should implement the CompositeProperty interface. This is an extension of HasProperties with the following methods:

These methods can be easily implemented using local variables to store the relevant information, as in

and similarly for the property information.

The purpose of the CompositeProperty interface is to allow traversal of the composite property tree by the property support code.

The accessor method that sets a composite property within a host should set it’s host and property information. This can be done using using the setPropertyHost and setPropertyInfo methods, as in the following example for a compound property of type TextureProps:

Alternatively, the same thing can be done using the static convenience method PropertyUtils.updateCompositeProperty:

If a composite property has a number of subclasses, it may optionally implement the static method

which returns an array of the class types of these subclasses. This can then be used by the system to automatically create GUI widgets that allow different instances of these subclasses to be selected.

1.6 Reading and Writing to Persistent Storage

Properties contain built-in support that make it easy to write and read their values to and from persistent storage.

First, PropertyInfo contains the methods

which allow an individual object value to written to a PrintStream or scanned from a ReaderTokenizer.

Second, if the host object maintains a PropertyList, it can use the convenience method

to write out values for all properties for which getAutoWrite() returns true. Properties will be written in the form

where value is the output from the writeValue method of the PropertyInfo structure.

To economize on file space, there is another method which only writes out property values when those values differ from the property’s default value:

Again, values are written only for the properties for which getAutoWrite() returns true. The method returns false if not property values are written.

To read in property values, their are the methods

where the former will inspect the input stream and scan in any recognized property of the form propertyName = value (returning true if such a property was found), while the latter will check the input for a property with a specific name (and return true if the specified property was found).

1.7 Inheritable Properties

Suppose we have a hierarchical arrangement of property-exporting objects, each exporting an identical property called stiffness whose value is a double (properties are considered identical if they have the same name and the same value type). It might then be desirable to have stiffness values propagate down to lower nodes in the hierarchy. For example, a higher level node might be a finite element model, with lower nodes corresponding to individual elements, and when we set stiffness in the model node, we would like it to propagate to all element nodes for which stiffness is not explicitly set. To implement this, each instance of stiffness is associated with a mode, which may be either explicit or inherited. When the mode is inherited, stiffness obtains its value from the first ancestor object with a stiffness property whose mode is explicit.

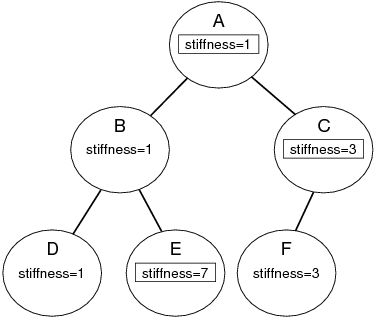

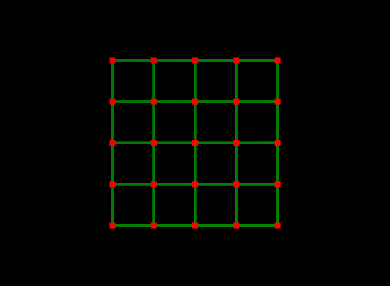

This is an example of property inheritance, as illustrated by Figure 1.1. Stiffness is explicitly set in the top node (A), and its value of 1 propagates down to nodes B and D whose stiffness mode is inherited. For node C, stiffness is also explicitly set, and its value of 4 propagate down to node F.

Another common use of property inheritance is in setting render properties: we might like some properties, such as color, to propagate down to descendant nodes for which a color has not been explicitly set.

To state things more generally: any property which can be inherited is called an inheritable property, and is associated with a mode whose value is either explicit or inherited. The basic operating principle of property inheritance is this:

Important:

An inherited property’s value should equal that of the nearest matching ancestor property which is explicitly set.

Other behaviors include:

-

•

Setting a property’s value (using either the set accessor in the host or the set method of the Property handle) will cause its mode to be set to explicit.

-

•

A property’s mode can be set directly. When set to explicit, all descendant nodes in the hierarchy are updated with the property’s value. When set to inherited, the property’s value is reset from the first explicit value in the ancestry, and then propagated to the descendants.

-

•

When a new node is added to the hierarchy, all inherited properties within the node are updated from the ancestry, and then propagated to the descendants.

If a property is inheritable, then the isInherited() method in its PropertyInfo structure will return true, and it’s property handle will be an instance of InheritableProperty:

Valid modes are PropertyMode.Explicit, PropertyMode.Inherited, and PropertyMode.Inactive. The latter is similar to Inherited, except that setting an Inactive property’s value will not cause its mode to be set to Explicit and its new value will not be propagated to hierarchy descendants.

The hierarchy structure which we have been describing is implemented by having host classes which correspond to hierarchy nodes implement the HierarchyNode interface.

These methods should be implemented as wrappers to the underlying hierarchy implementation.

1.8 Exporting Inheritable Properties

The property package provides most of the code required to make inheritance work, and so all that is required to implement an inheritable property is to provide some simple template code within its exporting class. We will illustrate this with an example.

Suppose we have a property called “width” that is to be made inheritable. Then addition to it’s value variable and set/get accessors, the host class should provide a PropertyMode variable along with set/get accessors:

The call to PropertyUtils.setModeAndUpdate() inside the set method ensures that inherited values within the hierarchy are properly whenever the mode is changed. If the mode is set to PropertyMode.Explicit, then the property’s value needs to be propagated to any descendent nodes for which it is inherited. If the mode is set to PropertyMode.Inherited, then the property’s value needs to be obtained from the ancestor nodes, and then also propagated to any descendent nodes for which it is inherited.

As mentioned in the previous section, explicitly setting a property’s value using the set accessor should cause it’s property mode to be set to Explicit and the new value to be propagated to hierarchy descendents. This can be accomplished by using PropertyUtils.propagateValue within the set accessor:

The actual creation of an inherited property can be done using the PropertyList methods

instead of the add or addReadOnly methods. The nameAndMethods argument may now specify up to five method names, corresponding, in order, to the get/set accessors for the property value, the getRange accessor, and the get/set accessors for the property’s mode. If any of these are omitted or specified as ’*’, then the system searches for names of the form getXxx, setXxx, getXxxRange, getXxxNode, and setXxxMode, where xxx is the property name.

Finally, the host objects which actually correspond to hierarchy nodes must implement the HierarchyNode interface as described in the previous section, and any routine which adds a node to the hierarchy must also implement the following code fragment:

This ensures that when a node is added, all property values within and beneath it are made consistent with the inheritance hierarchy.

1.9 Inheritable and Composite Properties

Property inheritance is not currently implemented for CompositeProperty objects, in order to avoid confusion of the inheritance rules. Suppose a class exports a composite property A, which in turn exports an inheritable property B. Now suppose that A is an inheritable property with its mode is set to Inherited. Then the entire structure of A, including the value of B and its mode, is inherited, and it is no longer possible to independently set the value of B, even if its mode is Explicit.

However, the leaf nodes of a composite property tree certainly can be inherited. Suppose a class ThisHost exports properties width, order, and renderProps, and that the latter is a composite property exporting width, color, and size. The leafs nodes of the composite property tree exported by ThisHost are the properties

Each of these may be inheritable, although renderProps may not be.

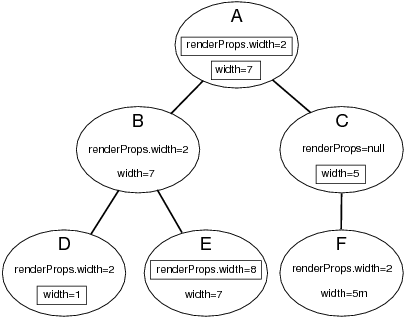

It should be noted that all the leaves in a composite property tree are considered to be unique properties and do not affect each other with respect to inheritance, even if some of the subcomponent names are the same. For instance, in the above example, the properties width and renderProps.width are different; each may inherit, respectively, from occurrences of width and renderProps.width contained in ancestor nodes, but they do not affect each other. This is illustrated by Figure 1.2.

Also, if a CompositeProperty is set to null within a particular node, then the inheritance of its subproperties passes straight through that node as though the property was not defined there at all. For example, in Figure 1.2, renderProps is set to null in node C, and so renderProps.width in node F inherits its value directly from node A.

Composite property inheritance is fully supported if an inheritable property’s set accessor invokes PropertyUtils.updateCompositeProperty as shown in the code example at the end of Section 1.4.2.

Chapter 2 Rendering

2.1 Overview

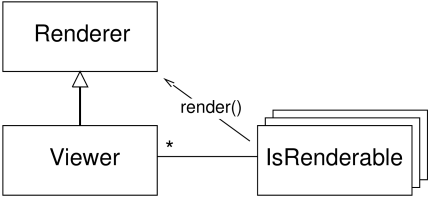

This chapter describes the maspack rendering package (maspack.render), which provides a general interface for the graphical rendering of objects and allows them to implement their own rendering methods. An object makes itself renderable by implementing the IsRenderable interface, and renderable objects can then be displayed by a Viewer, which typically provides features such as viewpoint control, lighting arrangements, fixtures such as coordinate axes, grids and clipping planes, and component selection. The viewer also implements a Renderer interface which provides the actual graphics functionality which renderable objects use to draw themselves.

2.1.1 Renderables and Viewers

Any object to be rendered should implement the IsRenderable interface, which defines the following four methods,

prerender() is called prior to rendering and allows the object to update internal rendering information and possibly give the viewer additional objects to render by placing them on the RenderList (Section 2.2.2). render() is the main method by which an object renders itself, using the functionality provided by the supplied Renderer. updateBounds() provides bounds information for the renderable’s spatial extent (which the viewer can use to auto-size the rendering volume); Vector3d describes a 3-vector and is defined in the package maspack.matrix. getRenderHints() returns flags giving additional information about rendering requirements, including whether the renderable is transparent (IsRenderable.TRANSPARENT) or two dimensional (IsRenderable.TWO_DIMENSIONAL).

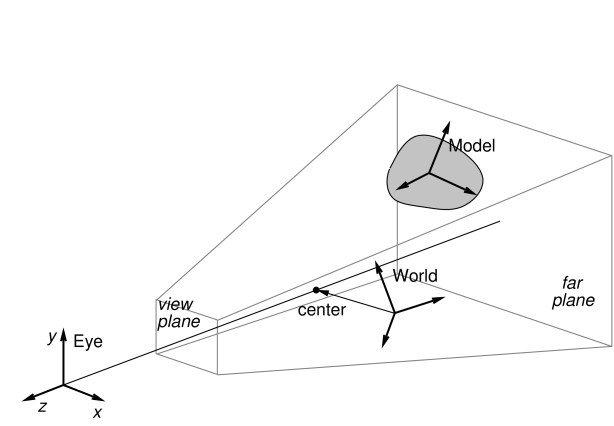

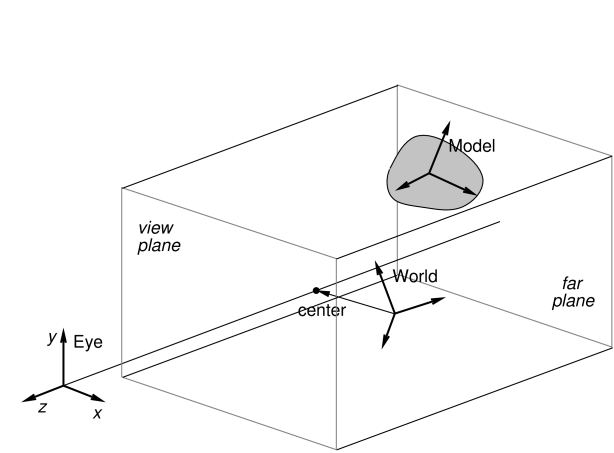

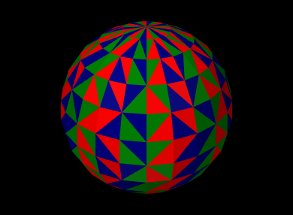

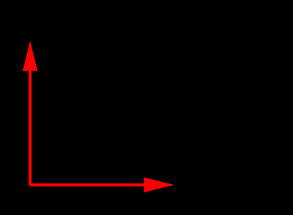

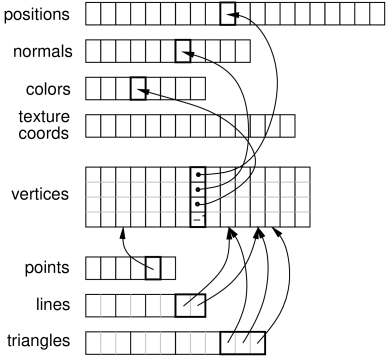

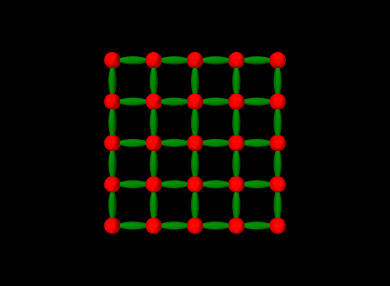

A Viewer provides the machinery needed to display renderable objects, and implements the Renderer interface (Section 2.3) with which renderables draw themselves within their render() methods. Renderer includes methods for maintaining graphics state, drawing primitives such as points, lines, and triangles, and drawing simple solid shapes. The general relationship between viewers, renderables, and renderers is shown in Figure 2.1. Rendering is triggered within a viewer by calling its rerender() method, which causes the prerender() and render() methods to be called for every renderable, as discussed in detail in Section 2.2.

2.1.2 A Complete Example

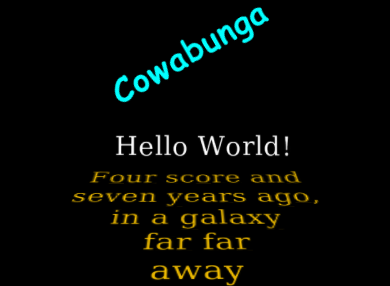

Listing 1 shows a complete example of a renderable object being declared and displayed in a viewer.

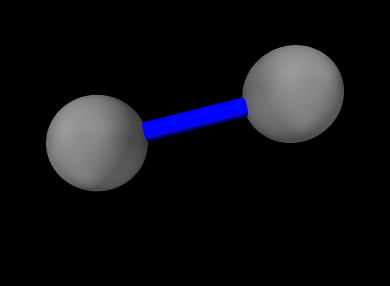

The example creates a class called RenderableExample which

implements isRenderable and the associated methods: prerender(), which does nothing; render(), which draws two gray

spheres connected by a blue cylinder; updateBounds(), which sets

the maximum and minimum bounds to be the points ![]() and

and ![]() , and getRenderHints() which returns no flags. The example

then defines a main() method which creates an instance of RenderableExample, along with a GLViewerFrame which contains a

viewer with which to display it. The renderable is added to the

viewer, the viewpoint is set so that the

, and getRenderHints() which returns no flags. The example

then defines a main() method which creates an instance of RenderableExample, along with a GLViewerFrame which contains a

viewer with which to display it. The renderable is added to the

viewer, the viewpoint is set so that the ![]() and

and ![]() axes of the

viewing plane are aligned with the world

axes of the

viewing plane are aligned with the world ![]() and

and ![]() axes, and the

frame is set to be visible, with the result shown in Figure

2.2.

axes, and the

frame is set to be visible, with the result shown in Figure

2.2.

2.2 Viewers

This section summarizes viewer functionality as defined by the Viewer interface. Note that specific viewer implementations may provide significant additional functionality, such as interactive view control, keyboard and mouse event handling, or graphical fixtures such as coordinate axes, grids or transformers. The description of these extra features is beyond the scope of this document.

Rendering is triggered within a viewer by calling its rerender() method, which then initiates two rendering steps:

-

1.

prerendering: The viewer calls the prerender() method for all its renderables, and adds those which are visible into a render list;

-

2.

repainting: All components in the render list are redrawn using their render() method;

Another viewer method, repaint(), can subsequently be called to initiate repainting without invoking prerendering.

In general, rerender() should be called whenever there is a change in the graphical state of the renderables. This includes changes in geometry, color, or visibility. In the context of simulation, rerender() should be called after every simulation step.

Otherwise, repaint() should be called when the graphical state of the scene has not changed but the final screen display has, such as when the viewpoint is changed, or the display window is unhidden or resized. This is more efficient than calling rerender() because it avoids the overhead of the prerendering step.

The prerendering step is invoked directly within the rerender() method, whereas the repainting step is called in whatever thread implements the graphical display. Since, as described below, one of the functions of the prerendering step is to cache rendering information, rerender() should be called in synchronization with both the graphical display thread and whatever thread(s) (such as a simulation thread) might be altering the state of the renderables.

2.2.1 Render lists

A render list is implemented by the class RenderList and sorts renderable components into separate sublists depending on whether they are primarily opaque, transparent, 2d opaque, and 2d transparent. These designations are determined by examining the flags returned by the renderable’s getRenderHints() method, with TWO_DIMENSIONAL indicating a two dimensional component and TRANSPARENT indicating a transparent one. These sublists assist the viewer in rendering a scene more realistically. For example, in OpenGL, better results are obtained if opaque objects are drawn before transparent ones, and two dimensional objects are drawn after three dimensional ones, with the depth buffer disabled.

A viewer maintains its own internal render list, and rebuilds it once during every prerendering step, using the following algorithm:

The list’s addIfVisible() method calls the component’s prerender() method, and then adds it to the appropriate sublist if it is visible:

A renderable’s visibility is determined as follows:

-

•

Any object implementing IsRenderable is visible by default.

-

•

If the object also implements HasRenderProps (as described in Section 2.3.9), then it is visible only if the RenderProps returned by getRenderProps() is non-null and the associated visible property is true.

As discussed in Section 2.2.3, a viewer can also be provided with an external render list, which is maintained by the application. It is the responsbility of the application, and not the viewer, to rebuild the external render list during the prerendering step. However, in the repainting step, the viewer will handle the redrawing of all the components in both its internal and external render lists.

2.2.2 Prerendering and Rendering

Prerendering allows renderables to

-

1.

update data structures anc cached data needed for rendering

-

2.

add additional renderables to the render list.

The caching of graphical state is typically necessary when rendering is performed in a thread separate from the main application, which can otherwise cause synchronization and consistency problems for renderables which are changing dynamically. For example, suppose we are simulating the motion of two objects, A and B, and we wish to render their positions at a particular time t. If the render thread is allowed to run in parallel with the thread computing the simulation, then A and B might be drawn with positions corresponding to different times (or worse, positions which are indeterminate!).

Synchronizing the rendering and simulation threads will aleviate this problem, but that means foregoing the speed improvement of allowing the rendering to run in parallel. Instead, renderables can use the prerender() method to cache their current state for later use in the render() method, in a manner analagous to double buffering. For example, suppose a renderable describes the position of a point in space, inside a member variable called myPos. Then its prerender() method can be constructed to save this into another another member variable myRenderPos for later use in render():

In the example above, the cached value is stored using floating point values, since this saves space and usually provides sufficient precision for rendering purposes.

As described in Section 2.4, objects can sometimes make use of render objects when rendering themselves. These can result in improved graphical efficieny, and also provide an alternate means for caching graphical information. If render objects are being used, it is recommmended that they be created or updated within prerender().

The prerender() method can also be used to add additional renderables to the render list. This is done by recursively calling the list’s addIfVisible() method. For example, if a renderable has two subcomponents, A and B, which also need to be rendered, then it can add them to the render list as follows:

In addition to adding A and B to the render list if they are visible, addIfVisible() will also call their prerender() methods, which will in turn give them the opportunity to add their own sub components to the render list. Note that prerender() is always called for the specified renderable, whether it is visible (and added to the list) or not (since even if a renderable is not visible, it might have subcomponents which are). This allows an entire hierarchy of renderables to be rendered by simply adding the root renderable to the viewer.

Note that any renderables added to the render list within prerender() are not added to the primary list of renderables maintained by the viewer.

Because of the functionality outlined above, calls to prerender() methods, and the viewer rerender() method that invokes them, should be synchronized with both the graphical display thread and whatever thread(s) might be altering the state of the renderables.

As indicated above, actual object rendering is done within its render() method, which is called during the repaint step, within whatever thread is responsible for graphical display. The render() method signature,

provides a Renderer interface (Section 2.3) which the object uses to draws itself, along with a flags argument that specifies additional rendering conditions. Currently only one flag is formally supported, Renderer.HIGHLIGHT, which requests that the object be rendered using highlighting (see Section 2.3.3.1).

2.2.3 Viewer renderables and external render lists

Renderables can be added or removed from a viewer using the methods

If renderables are arranged in a hierarchy, and add their own subcomponents to the render list during prerender(), as described in Section 2.2.2, then it may only be necessary to add top level renderable components to the viewer.

It is also possible to assign a viewer an external render list, for which the prerendering step is maintained by the application. This is useful in situations where multiple viewers are being used to simultaneously render a common set of renderables. In such cases, it would be wasteful for each viewer to repeatedly execute the prerender phase on the same renderable set. It may also lead to inconsistent results, if the state of renderables changes between different viewers’ invocation of the prerender phase.

To avoid this problem, an application may create its own render list and then give it to multiple viewers using setExternalRenderList(). A code sample for this is as follows:

The statement synchronize(extlist) ensures that calls to extlist.addIfVisible(r) (and the subsequent internal calls to prerender()) are synchronized with render() method calls made by the viewer. This works because the viewer also wraps its usage of extlist inside synchronize(extlist) statements.

Once the viewers have been assigned an external render list, they will handle the repainting step for its renderables, along with their own internal renderables, every time repainting is invoked through a call to either rerender() (as in the above example) or repaint().

2.2.4 Coordinate frames and view point control

A viewer maintains three primary coordinate frames for describing the relative locations and orientations of scene objects and the observing “eye” (or camera). These are the eye, model, and world frames.

The eye frame (sometimes also known as the camera frame) is a

right-handed frame located at the eye (or camera) focal point, with

the ![]() axis pointing toward the observer. The viewing frustum

is located in the half space associated with the negative

axis pointing toward the observer. The viewing frustum

is located in the half space associated with the negative ![]() axis of

the eye frame (and usually centered on said axis), with the near and

far clipping planes designated as the view plane and far

plane, respectively. The viewer also maintains a viewing center, typically located along the negative

axis of

the eye frame (and usually centered on said axis), with the near and

far clipping planes designated as the view plane and far

plane, respectively. The viewer also maintains a viewing center, typically located along the negative ![]() axis, and which

defines the point about which the camera pivots in response to

interactive view rotation.

axis, and which

defines the point about which the camera pivots in response to

interactive view rotation.

The viewing frustum is defined by the view and far planes, in combination with a projection matrix that transforms eye coordinates into clip coordinates. Most commonly, the projection matrix is set up for perspective viewing (Figure 2.3), but orthographic viewing (Figure 2.4) is sometimes used as well.

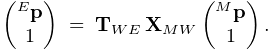

The model frame is the coordinate frame in which geometric

information for rendered objects is specified, and the world frame is the

base with respect to which the model and eye frames are defined. The

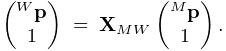

model matrix is the ![]() homogeneous affine transform

homogeneous affine transform

![]() from model to world coordinates, while the view matrix

is a

from model to world coordinates, while the view matrix

is a ![]() homogeneous rigid transform

homogeneous rigid transform ![]() from world to eye

coordinates. The composition of

from world to eye

coordinates. The composition of ![]() and

and ![]() transforms a

point from model coordinates

transforms a

point from model coordinates ![]() into eye coordinates

into eye coordinates ![]() ,

according to:

,

according to:

|

Note: the renderer assumes that points and vectors are column-based and the coordinate transforms work by pre-multiplying these column vectors. This is in constrast to some computer graphics conventions in which vectors are row based. Our transformation matrices are therefore the transpose of those defined with respect to a row-based convention.

Initially the world and model frames are coincident, so that ![]() . Rendering methods often redefine the model matrix, allowing

existing object geometry or built-in drawing methods to be used at

different scales and poses throughout the scene. The methods

available for querying and controlling the model matrix are described

in Section 2.3.8.

. Rendering methods often redefine the model matrix, allowing

existing object geometry or built-in drawing methods to be used at

different scales and poses throughout the scene. The methods

available for querying and controlling the model matrix are described

in Section 2.3.8.

Meanwhile, changing the view matrix allows the scene to be observed from different view points. A viewer provides the following direct methods for setting and querying the view matrix:

where

RigidTransform3d is defined

in maspack.matrix and represents a ![]() homogenous

rigid transformation.

Instead of specifying the view matrix, it is sometimes more convenient

to specify its inverse, the eye-to-world transform

homogenous

rigid transformation.

Instead of specifying the view matrix, it is sometimes more convenient

to specify its inverse, the eye-to-world transform ![]() .

This can be done with

.

This can be done with

where

Point3d and

Vector3d are also defined in

maspack.matrix and represent 3 dimensional points and vectors.

The method

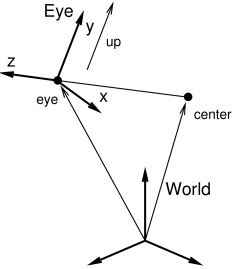

setEyeToWorld(eye,center,up) sets ![]() according to legacy

OpenGL conventions so that (with respect to world coordinates) the eye

frame’s origin is defined by the point eye, while its

orientation is defined such that the

according to legacy

OpenGL conventions so that (with respect to world coordinates) the eye

frame’s origin is defined by the point eye, while its

orientation is defined such that the ![]() axis points from eye

to center and the

axis points from eye

to center and the ![]() axis is parallel to up (see Figure

2.5).

axis is parallel to up (see Figure

2.5).

Point3d is a subclass of Vector3d used to describe points in space. The only difference between the two is that they transform differently in response to rigid transforms (described by RigidTransform3d) or affine transforms (described by AffineTransform3d): point transformations include the translational component, while vector transformations do not.

The viewer also maintains a center position and an up

vector that are used to modify ![]() in conjunction

with the following:

in conjunction

with the following:

Again with respect to world coordinates, setEye(eye) sets the origin of the eye frame while recomputing its orientation from the current values of center and up, while setCenter(center) and setUpVector(up) set center and up and recompute the eye frame’s orientation accordingly.

It is also possible to specify axis-aligned views, so that the axes of the eye frame are exactly aligned with the axes of the world frame. This can be done using

setAxialView() sets the rotational

component of ![]() to REW, and moves the eye position so

that the viewer’s center lies along the new

to REW, and moves the eye position so

that the viewer’s center lies along the new ![]() axis. It also

sets the up vector to the

axis. It also

sets the up vector to the ![]() axis of REW, and stores REW as the viewer’s nominal axial view which can be used for

determining default orientations for fixtures such as grids.

AxisAlignedRotation defines 24 possible

axis-aligned orientations, and so there are 24 possible axis-aligned

views. Some of those commonly used in association with setAxialView() are:

axis of REW, and stores REW as the viewer’s nominal axial view which can be used for

determining default orientations for fixtures such as grids.

AxisAlignedRotation defines 24 possible

axis-aligned orientations, and so there are 24 possible axis-aligned

views. Some of those commonly used in association with setAxialView() are:

| X_Y | eye frame and world frame have the same orientaion |

|---|---|

| X_Z | eye frame |

| Y_Z | eye frame |

There are several methods available to reset the viewing frustum:

The setPerspective methods create a perspective-based frustum

(Figure 2.3). The first methods explicitly sets

the left, right, bottom and top edges of the view plane, along with

the (positive) distances to the near (i.e., view) and far planes,

while the second method creates a frustum centered on the ![]() axis,

using a specified vertical field of view. The setOrthogonal

methods create an orthographic frustum (Figure 2.4)

in a similar manner.

axis,

using a specified vertical field of view. The setOrthogonal

methods create an orthographic frustum (Figure 2.4)

in a similar manner.

Information about the current frustum can be queried using

For convenience, the viewer can also automatically determine appropriate values for the center and eye positions, and then fit a suitable viewing frustum around the scene. This is done using the renderables’ updateBounds() method to estimate a scene center and radius, along with the current value of the up vector to orient the eye frame. The auto-fitting methods are:

These auto-fit methods also make use of a default vertical field-of-view, which is initially 35 degrees and which can be controlled using

Finally, the viewer’s background color can be controlled using

2.2.5 Lights

Viewers also maintain scene lighting. Typically, a viewer will be initialized to a default set of lights, which can then be adjusted by the application. The existing light set can be queried using the methods

where Light is a class

containing parameters for the light.

Lights are described using the same parameters as those of OpenGL,

as described in Chapter 5 of the OpenGL Programming Guide (Red book).

Each has a position ![]() and (unit) direction

and (unit) direction ![]() in space, a type, colors associated with ambient, specular and diffuse lighting,

and coefficients for its attenuation formula. The attenuation formula

is

in space, a type, colors associated with ambient, specular and diffuse lighting,

and coefficients for its attenuation formula. The attenuation formula

is

where ![]() is the light intensity,

is the light intensity, ![]() is the distance between the

light and the point being lit, and

is the distance between the

light and the point being lit, and ![]() ,

, ![]() , and

, and ![]() are the constant,

linear and quadratic attentuation factors.

are the constant,

linear and quadratic attentuation factors.

Spot lights have the same properties as other lights, in addition to

also having a cutoff angle ![]() and an exponent

and an exponent ![]() .

The cutoff angle is the angle between the direction of the light and

the edge of its cone, while the exponent, whose default value is 0, is

used to determine how focused the light is. If

.

The cutoff angle is the angle between the direction of the light and

the edge of its cone, while the exponent, whose default value is 0, is

used to determine how focused the light is. If ![]() is the unit

direction from the light to the point being lit, and if

is the unit

direction from the light to the point being lit, and if ![]() , then the point being lit is within

the light cone and the light intensity

, then the point being lit is within

the light cone and the light intensity ![]() in the above equation is

multiplied by the spotlight effect, given by

in the above equation is

multiplied by the spotlight effect, given by

Since ![]() , a value of

, a value of ![]() gives

the least light reductuon, while valuse of

gives

the least light reductuon, while valuse of ![]() increase the

intensity of the spot light towards its center.

increase the

intensity of the spot light towards its center.

Information for a specific light is provided by a Light object, which contains the following fields:

- enabled

-

A boolean describing whether or not the light is enabled.

- type

-

An instance of Light.LightType describing the type of the light. Current values are DIRECTIONAL, POINT, and SPOT.

- position

-

A 3-vector giving the position of the light in world coordinates.

- direction

-

A 3-vector giving the direction of the light in world coordinates.

- ambient

-

RGBA values for the ambient light color.

- diffuse

-

RGBA values for the diffuse light color.

- specular

-

RGBA values for the specular light color.

- constantAttenuation

-

Constant term C in the attenuation formula

- linearAttenuation

-

Linear term L in the attenuation formula

- quadraticAttenuation

-

Quadratic term Q in the attenuation formula

- spotCosCutoff

-

For spotlights, the cosine of the cutoff angle

.

. - spotExponent

-

For spotlights, the exponent

.

.

Each of these fields is associated with accessor methods inside Light.

An application can also add or remove lights using

To create a new light, the application instantiates a Light object, sets the appropriate fields, and then calls addLight(). Lights can be removed either by reference to the light object or its index.

Lights are updated by the viewer at the beginning of each repaint step. That means that for lights already added to the viewer, any changes made to the fields of the associated Light object will take effect at the beginning of the next repaint step.

2.3 The Renderer Interface

This section describes the Renderer interface, which supplies the methods which objects can use to draw themselves. This includes methods for setting graphics state, and drawing point, line and triangle primitives as well as simple solid shapes.

2.3.1 Drawing single points and lines

The most basic Renderer methods provide for the drawing of single points, lines, and triangles. The following can be used to draw a pixel-based point at a specified location pnt, or a pixel-based line segment between two points pnt0 and pnt1:

where, as mentioned earlier, Vector3d represents a 3-vector and is defined in maspack.matrix.

Note: while convenient for drawing small numbers of points and lines, the methods described in this section can be quite inefficient. For rendering larger numbers of primitives, one should use either the draw mode methods of Section 2.3.4, or the even more efficient render objects described in Section 2.4.

The size of the points or lines (in pixels) can be controlled via following methods:

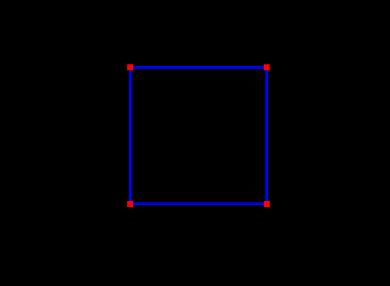

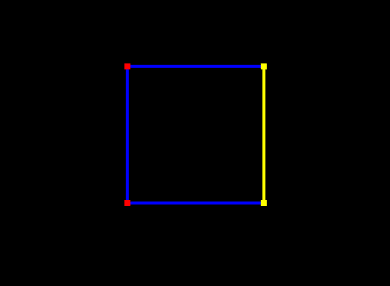

The following example draws a square in the x-z plane, with blue edges and red corners:

In addition to the draw methods described above, we use setShading() to disable and restore lighting (Section 2.3.6), and setColor() to set the point and edge colors (Section 2.3.3). It is generally necessary to disable lighting when drawing pixel-based points and lines which do not (as in this example) contain normal information. The result is shown in Figure 2.6.

For visualization and selection purposes, it is also possible to draw points and lines as solid spheres and cylinders; see the description of this in Section 2.3.1.

2.3.2 Drawing single triangles

Another pair of methods are available for drawing solid triangles:

Each of these draws a single triangle, with a normal computed automatically with respect to the counter-clockwise orientation of the vertices.

Note: as with drawing single points and lines, the single triangle methods are inefficient. For rendering large numbers of triangles, one should use either the draw mode methods of Section 2.3.4, or the render objects described in Section 2.4.

When drawing triangles, the renderer can be asked to draw the front face, back face, or both faces. The methods to control this are:

where FaceStyle is an enumerated type of Renderer with the possible values:

- FRONT:

-

Draw the front face

- BACK:

-

Draw the back face

- FRONT_AND_BACK:

-

Draw both faces

- NONE:

-

Draw neither face

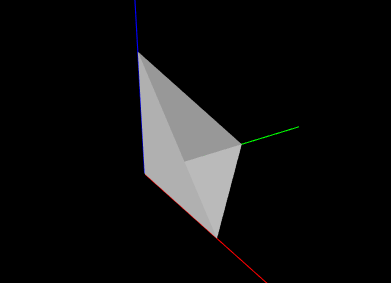

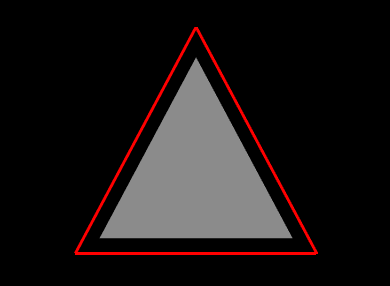

The example below draws a simple open tetrahedron with one face missing:

The result is drawn using the renderer’s default gray color, as seen in Figure 2.7.

2.3.3 Colors and associated attributes

The renderer maintains a set of attributes for controlling the color,

reflectance and emission characteristics of whatever primitives or

shapes are currently being drawn. Color values are stored as RGBA

(red, green, blue, alpha) or RGB (red, green, blue) values in the

range ![]() . The attributes closely follow the OpenGL model for

lighting materials and include:

. The attributes closely follow the OpenGL model for

lighting materials and include:

- Front color:

-

Specifies the reflected RGBA values for diffuse and ambient lighting. The default value is opaque gray:

.

. - Back color:

-

Optional attribute, which, if not null, specifies the reflected RGBA values for diffuse and ambient lighting for back faces only. Otherwise, the front color is used. The default value is null.

- Specular:

-

Specifies the reflected RGB values for specular lighting. The default value is

.

. - Emission:

-

Specifies the RGB values for emitted light. The default value is

.

. - Shininess:

-

Specifies the specular exponent of the lighting equation, in the range

![[0,128]](mi/mi84.png) . The default value is 32.

. The default value is 32.

The resulting appearance of subsequently rendered primitives or shapes depends on the values of these attributes along with the shading settings (Section 2.3.6). When lighting is disabled (by calling setShading(Shading.NONE)), then rendering is done in a uniform color using only the front color (diffuse/ambient) attribute.

The primary methods for setting the color attributes are:

where rgba and rgb are arrays of length 4 or 3 that provide the required RGBA or RGB values. The rgba arguments may also have a length of 3, in which case an alpha value of 1.0 is assumed. For setBackColor(), rgba may also be null, which will cause the back color to be cleared.

Most commonly, there is no difference between the desired front and back colors, in which case one can simply use the various setColor methods instead:

These take RGB or RGBA values and set the front color, while at the same time clearing the back color, so that the front color is automatically applied to back faces. The method setFrontAlpha() independently sets the alpha value for the front color.

To query the color attributes, one may use:

The first four of these return the relevant RGBA or RGB values as an array of floats. Applications may supply the float arrays using the arguments rgba or rgb; otherwise, if these arguments are null, the necessary float arrays will be allocated. If no back color is set, then getBackColor() will return null.

2.3.3.1 Highlighting

The renderer supports the notion of highlighting, which allows the application to indicate to the renderer that subsequently rendered components should be drawn in a highlighted manner. This is typically used to show (visually) that they are selected in some way.

The highlighting style used by the renderer can be queried using the method

At present, only two values of HighlightStyle are supported:

- COLOR:

-

Highlighting is done by rendering with a distinct color.

- NONE:

-

Highlighting is disabled.

The color used for color-based highlighting can be queried using

To enable or disable highlighting, the application can use the methods

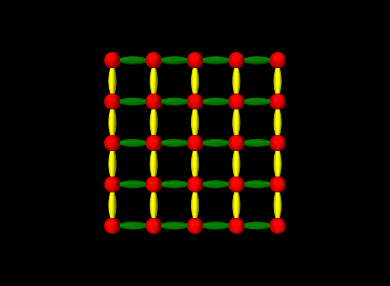

As an illustration, we alter the square drawing example of Section 2.3.1 to highlight the corners corresponding to points p1 and p1, as well as the edge between p1 and p1:

The result, assuming a highlight style of HighlightStyle.COLOR and a yellow highlight color, is shown in Figure 2.8.

2.3.4 Drawing using draw mode

For convenience, the renderer provides a draw mode in which primitive sets consisting of points, lines and triangles can be assembled by specifying a sequence of vertices and (if necessary) normals, colors, and/or texture coordinates between calls to beginDraw(mode) and endDraw(). Because draw mode allows vertex and normal information to be collected together and sent to the GPU all at one time (when endDraw() is called), it can be significantly more efficient than the single point, line and triangle methods described in the previous sections. (However, using render objects can be even more efficient, as described in Section 2.4.)

| DrawMode | Description | Equivalent OpenGL Mode |

|---|---|---|

| POINTS | A set of independent points | GL_POINTS |

| LINES | A set of line segments, with two vertices per segment | GL_LINES |

| LINE_STRIP | A line strip connecting the vertices in order | GL_LINE_STRIP |

| LINE_LOOP | A line loop connecting the vertices in order | GL_LINE_LOOP |

| TRIANGLES | A set of triangles, with three vertices per triangle | GL_TRIANGLES |

| TRIANGLE_STRIP | A triangle strip | GL_TRIANGLE_STRIP |

| TRIANGLE_FAN | A triangle fan | GL_TRIANGLE_FAN |

Draw mode is closely analgous to immediate mode in older OpenGL specifications. The types of primitive sets that may be formed from the vertices are defined by Renderer.DrawMode and summarized in Table 2.1. The primitive type is specified by the mode argument of the beginDraw(mode) call that initiates draw mode.

Between calls to beginDraw(mode) and endDraw(), vertices may be added using the methods

each of which creates and adds a single vertex for the specified point. Normals may be specified using

It is not necessary to specify a normal for each vertex. Instead, the first setNormal call will specify the normal for all vertices defined to that point, and all subsequent vertices until the next setNormal call. If no setNormal call is made while in draw mode, then the vertices will not be associated with any normals, which typically means that the primitives will be rendered as black unless lighting is disabled (Section 2.3.6).

It is also possible to specify per-vertex colors during draw mode. This can be done by calling any of the methods of Section 2.3.3 that cause the front color to be set. The indicated front color will then be assigned to vertices defined up to that point, and all subsequent vertices until the next call that sets the front color. The primitives will then be rendered using vertex coloring, in which the vertex color values are interpolated to determine the color at any given point in a primitive. This color overrides the current front (or back) color value (or mixes with it; see Section 2.3.7). If vertex colors are not specified, then the primitives will be rendered using the color attributes that were in effect when draw mode was first entered.

Finally, per-vertex texture coordinates can be specified within draw mode. The methods for doing this are analagous to those for setting normals,

where Vector2d is defined in maspack.matrix. Texture coordinates are required for any rendering that involves texture mapping, including color, normal or bump maps (Section 2.5).

When draw mode is exited by calling endDraw(), the specified vertices, along with any normal, color or texture information, is sent to the GPU and rendered as the specified primitive set, using the current settings for shading, point size, line width, etc.

As an example, the code below uses draw mode to implement the square drawing of Section 2.3.1 (which is shown in Figure 2.6):

Note that no normals need to be specified since both primitive sets are rendered with lighting disabled.

Another example uses draw mode to implement the partial tetrahedron example from Section 2.3.2 (which is shown in Figure 2.7):

Note that because for this example we are displaying shaded faces, it is necessary to specify a normal for each triangle.

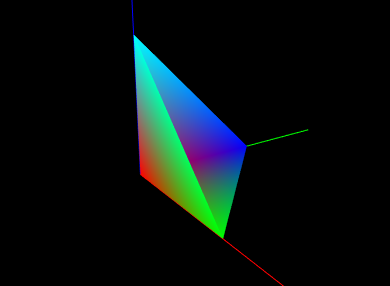

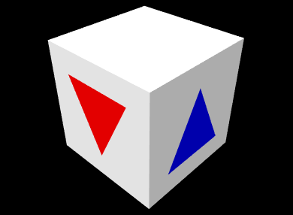

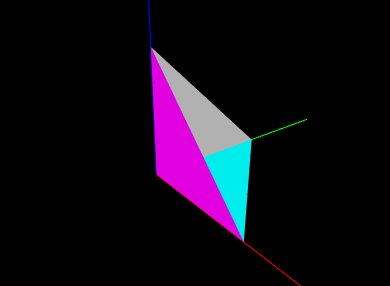

As a final example, we show the tetrahedon example again, but this time with colors specified for each vertex, which initiates vertex coloring. Vertices p0, p1, p2, and p3 are associated with the colors RED, GREEN, BLUE, and CYAN, respectively. The corresponding code looks like this:

and the rendered result is shown in Figure 2.9.

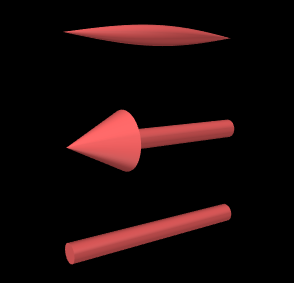

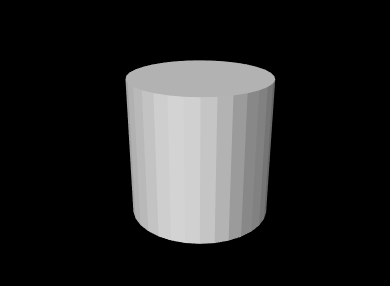

2.3.5 Drawing solid shapes

For convenience, the renderer provides a number of methods for drawing solid shapes. These include spheres, cylinders, cubes, boxes, arrows, spindles, cones, and coordinate axes.

Methods for drawing spheres include

both of which draw a sphere with radius rad centered at the point pnt, using the current color and shading settings. For drawing cylinders, arrows, or spindles, one can use

each of which draws the indicated shape between points pnt0 and pnt1 with a cylindrical radius of rad, again using the current color and shading. The argument capped for cylinders and arrows indicates whether or not a solid cap should be drawn over any otherwise open ends. For arrows, the arrow head size is based on the radius and line segment length. Another method,

draws an arrow starting at pnt and extending in the direction dir, with a length given by the length of dir times scale.

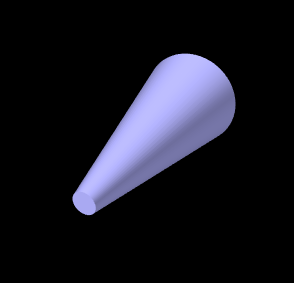

A cone can be drawn similarly to a cylinder, using

with the only difference being that there are now two radii, rad0 and rad1, at each end.

|

|

|

To draw cubes and boxes, one may use

The drawCube methods draw an axis-aligned cube with a specified width centered on the point pnt. Similarly, the first two drawBox methods draw an axis-aligned box with the indicated x, y, and z widths. Finally, the last drawBox method draws a box centered on, and aligned with, the coordinate frame defined (with respect to model coordinates) by the RigidTransform3d TBM.

When rendering the curved solids described above, the renderer must create surface meshes that approximate their shapes. The resolution used for doing this can be controlled using a parameter called the surface resolution. This is defined to be the number of line segments that would be used to approximate a circle, and this level of resolution is then employed to create the mesh. The renderer initializes this parameter to a reasonable default value, but applications can query or modify it as needed using the following methods:

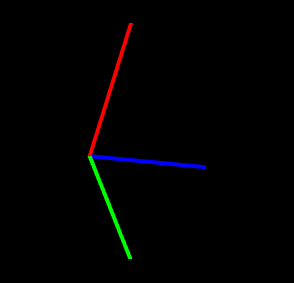

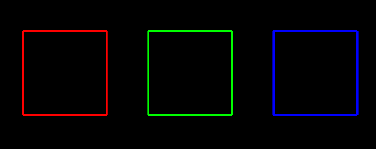

Coordinate axes can be drawn to show the position and orientation of a spatial coordinate frame:

For these, the coordinate frame is described (with respect to the current

model coordinates) by a

RigidTransform3d T. The first method

draws the frame’s axes

as lines with the specified length len and width. The second method allows different lengths (lens) to be

specified for each axis. The axis lines are rendered using regular

pixel-based lines with non-shaded colors, with the ![]() ,

, ![]() , and

, and ![]() axes normally being colored red, green, and blue.

However, if highlight is true and the highlight

style is

HighlightStyle.COLOR

(Section 2.3.3.1), then all axes are drawn

using the using the highlight color.

axes normally being colored red, green, and blue.

However, if highlight is true and the highlight

style is

HighlightStyle.COLOR

(Section 2.3.3.1), then all axes are drawn

using the using the highlight color.

Some of the solids are illustrated in Figure 2.10.

2.3.6 Shading and color mixing

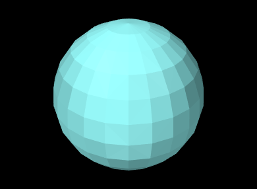

Shading determines the coloring of each rendering primitive (point, line or triangle), as seen from the eye, as a result of its color attributes, surface normals and the current lighting conditions. At any given point on a primitive, the rendered color is the coloring seen from the eye that results from the incident illumination, color attributes, and surface normal at that point. In general, the rendered color varies across the primitive. How this variation is handled depends on the shading, defined by Renderer.Shading:

- FLAT:

-

The rendered color is determined at the first vertex and applied to the entire primitive. This makes it easy to see the individual primitives, which can be desirable under some circumstances. Only one normal needs to be specified per primitive.

- SMOOTH:

-

Rendered colors are computed across the primitive, based on interpolated normal information, resulting in a smooth appearance. The interpolation technique depends on the renderer. OpenGL 2 implementations use Gouraud shading, while the OpenGL 3 renderer uses Phong shading.

- METAL:

-

Rendered colors are computed using a smooth shading technique that may be more appropriate to metalic objects. For some renderer implementations, there may be no difference between METAL and SMOOTH.

- NONE:

-

Lighting is disabled. The rendered color becomes the diffuse color, which is applied uniformly across the primitive. No normals need to be specified.

|

|

|

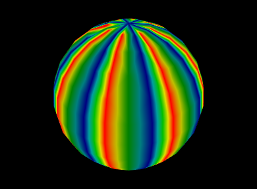

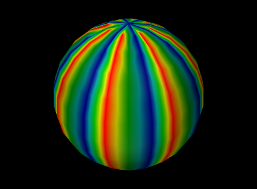

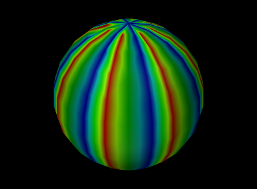

Figure 2.11 shows different shading methods applied to a sphere.

The shading can be controlled and queried using the following methods,

where setShading() returns the previous shading setting.

Lighting is often disabled, using Shading.NONE, when rendering pixel-based points and lines. That’s because normal information is not naturally defined for these primitives, and also because even if normal information were to be provided, shading could make them either invisible or hard to see from certain viewing angles.

2.3.7 Vertex coloring, and color mixing and interpolation

As mentioned in Section 2.3.4, it is possible to specify vertex coloring for a primtive, in which vertex color values are interpolated to determine the color at any given point in the primitive. Vertex colors can be specified by calling setColor primitives while in draw mode. They can also be specified as part of a RenderObject (Section 2.4).

When vertex coloring is used, the interpolated vertex colors either replace or are combined with the current front (or back) diffuse color at each point in the primitive. Other color attributes, such as emission and specular, are unchanged. If lighting is disabled, then the rendered color is simply set to the resulting vertex/diffuse color combination.

Whether the vertex color replaces or combines with the underlying diffuse color is controlled by the enumerated type Renderer.ColorMixing, which has four different values:

| REPLACE | replace the diffuse color (default behavior) |

| MODULATE | multiplicatively combine with the diffuse color |

| DECAL | combine with the diffuse color based on the latter’s alpha value |

| NONE | diffuse color is unchanged (vertex colors are ignored) |

|

|

|

Color mixing can be controlled using these methods:

A given Renderer implementation may not support all color mixing modes, and so the hasVertexColorMixing() can be used to query if a given mixing mode is supported. The OpenGL 2 renderer implementation does not support MODULATE or DECAL. Some examples of vertex coloring with different shading and color mixing settings are shown in Figure 2.12.

The renderer’s default color mixing mode is MODULATE. This has the advantage of allowing rendered objects to still appear differently when highlighting is enabled and the highlight style is HighlightStyle.COLOR (Section 2.3.3.1), since the highlight color is combined with the vertex color rather than being replaced by it.

|

|

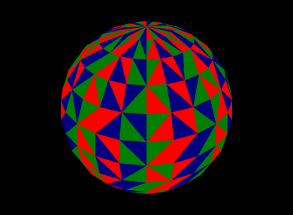

Vertex coloring can be used in different ways. Assigning different colors to the vertices of a primitive will result in a blending of those colors within the primitive (Figures 2.9 and 2.12). Assigning the same colors to the vertices of a primitive can be used to give each primitive a uniform color. Figure 2.13 shows vertex coloring applied to the same sphere as Figures 2.3.6 and 2.12, only with the vertices for each face being uniformly set to red, green or blue, resulting in uniformly colored faces.

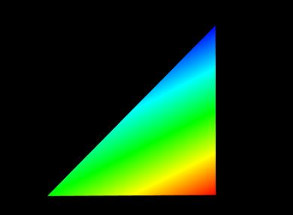

|

|

When using vertex coloring, the interpolation of colors across the primitive can be done either in RBG or HSV space. HSV stands for hue, saturation, and value (or brightness), and it is often the best interpolation method to use when the vertex colors have a uniform brightness that the interpolation should preserve. This leads to a “rainbow” look that is common in situations like color-based stress plots. Figure 2.14 illustrates the difference between RGB and HSV interpolation.

Color interpolation is specified with the enumerated type Renderer.ColorInterpolation, which currently has the two values RGB and HSV. Within the renderer, it can be controlled using the methods

2.3.8 Changing the model matrix

When point positions are specified to the renderer, either as the arguments to various draw methods, or for specifying vertex locations in draw mode, the positions are assumed to be defined with respect to the current model coordinate frame. As described in Section 2.2.4, this is one of the three primary coordinate frames associated with the viewer, with the other two being the world and eye frames.

The relationship between model and world frames is controlled by the

model matrix ![]() , which is a

, which is a ![]() homogeneous

affine transform that transforms points in model coordinates (denoted

by

homogeneous

affine transform that transforms points in model coordinates (denoted

by ![]() ) to world coordinates (denoted by

) to world coordinates (denoted by ![]() ), according to

), according to

|

Initially the world and model frames are coincident, so that ![]() . Rendering methods often redefine the model matrix, allowing

object geometry to be specified in a conveniently defined local

coordinate frame, and, more critically, allowing the predefined

geometry associated with existing rendering objects (Section

2.4) or built-in drawing methods to be used at

different scales and poses throughout the scene. Methods for querying

and controlling the model matrix include:

. Rendering methods often redefine the model matrix, allowing

object geometry to be specified in a conveniently defined local

coordinate frame, and, more critically, allowing the predefined

geometry associated with existing rendering objects (Section

2.4) or built-in drawing methods to be used at

different scales and poses throughout the scene. Methods for querying

and controlling the model matrix include:

Both getModelMatrix() and

getModelMatrix(XMW) return the current model matrix value (where the

value returned by the first method should not be modified).

AffineTransform3dBase is a base class defined

in maspack.matrix and represents a ![]() homogeneous transform

that is either a rigid transform

(of type

RigidTransform3d) or an affine transform

(of type AffineTransform3d).

setModelMatrix(XMW)

explicitly sets the model matrix, while

mulModelMatrix(X)

post-multiplies the current matrix by another rigid or

affine transform

homogeneous transform

that is either a rigid transform

(of type

RigidTransform3d) or an affine transform

(of type AffineTransform3d).

setModelMatrix(XMW)

explicitly sets the model matrix, while

mulModelMatrix(X)

post-multiplies the current matrix by another rigid or

affine transform ![]() , which is equivalent to

setting

, which is equivalent to

setting

translateModelMatrix(tx,ty,tz) and

rotateModelMatrix(zdeg,ydeg,xdeg) translate or rotate the model

frame by post-multiplying the model matrix by a rigid transform

describing either a translation (tx, ty, tz), or

a rotation formed by three successive rotations: zdeg degrees

about the ![]() axis, ydeg degrees about the new

axis, ydeg degrees about the new ![]() axis, and

finally xdeg degrees about the new

axis, and

finally xdeg degrees about the new ![]() axis.

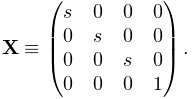

scaleModelMatrix(s)

scales the current model frame by

post multiplying the model matrix by a uniform scaling transform

axis.

scaleModelMatrix(s)

scales the current model frame by

post multiplying the model matrix by a uniform scaling transform

|

Finally, pushModelMatrix() and popModelMatrix() save and restore the model matrix from an internal stack. It is common to wrap changes to the model matrix inside calls to pushModelMatrix() and popModelMatrix() so that the model matrix is preserved unchanged for subsequent use elsewhere:

2.3.9 Render properties and RenderProps

The maspack.render package defines an object called RenderProps which encapsulates many of the properties that are needed to describe how an oject should be rendered. These properties control the color, size, and style of the three primary rendering primitives: faces, lines, and points, and all are exposed using the maspack.properties package, so that they can be easily set from a GUI or inherited from ancestor components.

A renderable object can maintain its own RenderProps object, and use the associated properties as it wishes to control rendering from within its render() method. Objects maintaining their own RenderProps can declare this by implementing the HasRenderProps interface, which declares the methods

It is not intended for RenderProps to encapsulate all properties relevant to the rendering of objects, but only those which are commonly encountered. Any particular renderable may still need to define and maintain more specialized rendering properties.

Renderable objects that implement both HasRenderProps and IsSelectable (an extension of IsRenderable for selectable objects described in Section 2.7) are identified by the combined interface Renderable.

2.3.9.1 Drawing points and lines as 3D solid objects

RenderProps contains two properties, pointStyle and lineStyle, that indicate whether points and lines should be drawn using standard pixel-based primitives or some type of solid 3D geometry. Often, the latter can be preferable for visualization and graphical selection. pointStyle and lineStyle are described by the enumerated types Renderer.PointStyle and Renderer.LineStyle, respectively, which contain the following entries:

| PointStyle: | |

|---|---|

| POINT | pixel-based point |

| SPHERE | solid sphere |

| CUBE | solid cube |

| LineStyle: | |

| LINE | pixel-based line |

| CYLINDER | solid cylinder |

| SOLID_ARROW | solid arrow |

| SPINDLE | spindle (an ellipsoid tapered at each end) |

The size (in pixels) for pixel-based points is controlled by the property pointSize, whereas the radius for spherical points and half-width for cubic points is controlled by pointRadius. Likewise, the width (in pixels) for pixel-based lines is controlled by lineWidth, whereas the radii for lines rendered as cylinders, arrows or spindles is controlled by lineRadius.

2.3.9.2 RenderProps taxonomy

All of the RenderProps properties are listed in table 2.2. Values for the shading, faceStyle, lineStyle and pointStyle properties are defined using the following enumerated types: Renderer.Shading, Renderer.FaceStyle, Renderer.PointStyle, and Renderer.LineStyle. Colors are specified using java.awt.Color.

| property | purpose | default value |