
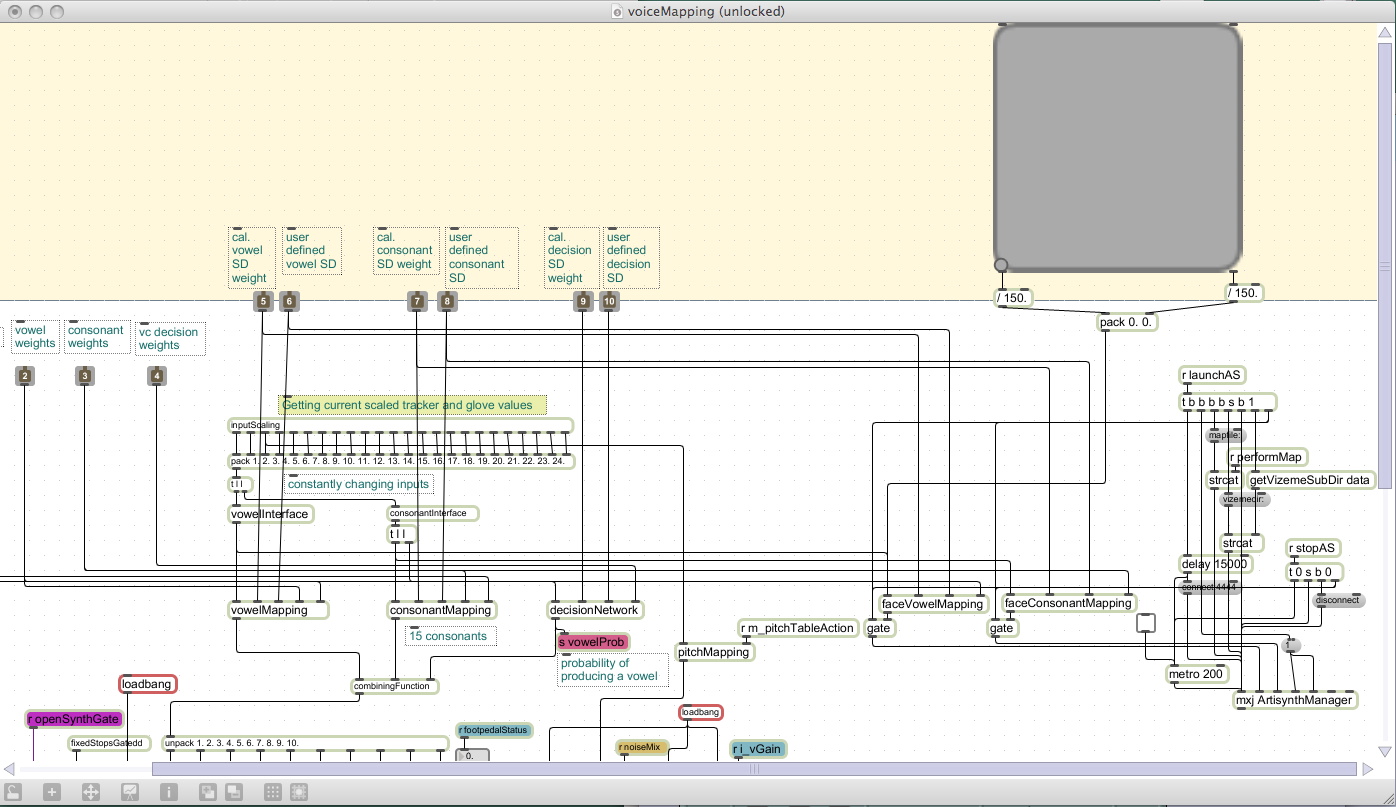

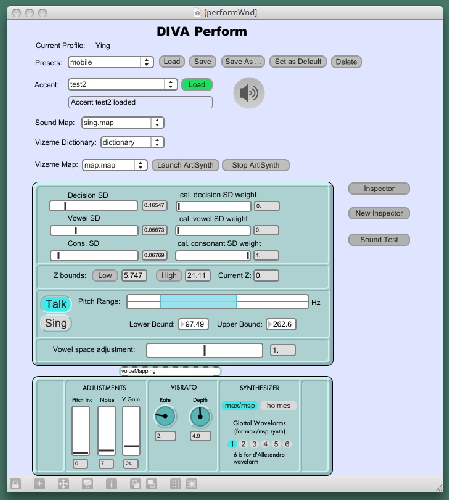

Running the Talking Face in DIVA Performance Mode

The final step of implementation is largely a linking process and consists of the following adjustments:

i) Modify the object sendToFace from step 3 as follows:

At this point, the user should be able to start the system in performance mode, choose a map file and launch ArtiSynth. A talking face should then appear and move in sync with the speech output as the user performs.

In this context, the MXJ object is more appropriately named ArtisynthManager, since it is responsible for all actions related to ArtiSynth in the DIVA project. Its implementation in the patcher "voiceMapping" is shown below:

The "Artisynth Manager" object is shown at the bottom right. As in step 4, an x-y space is provided for the user, simulating the performance vowel space.

The updated performance window is displayed here. It adds the "Vizeme Dictionary" and "Vizeme map" menus, along with buttons for launching and stopping ArtiSynth.
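The page describes ArtisynthManager only by its responsibilities: launching and stopping ArtiSynth, and handling everything ArtiSynth-related during a performance. The plain-Java sketch below shows one way such a manager could be organized. It is hypothetical throughout. The class name `ArtisynthManagerSketch`, its methods, the nearest-centre lookup standing in for the "Vizeme map", the vowel-space coordinates and the `"viseme …"` message string are all assumptions. It does not use the MXJ (`com.cycling74.max`) API and does not start or talk to a real ArtiSynth process.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical sketch only: not the DIVA ArtisynthManager, and not an MXJ (Max/MSP) external.
// launch()/stop() merely toggle a flag; no ArtiSynth process is started or contacted.
final class ArtisynthManagerSketch {
    // Assumed "viseme map": label -> centre (x, y) in the simulated vowel space.
    private final Map<String, double[]> visemeMap = new LinkedHashMap<>();
    private boolean running = false;

    void addViseme(String label, double x, double y) {
        visemeMap.put(label, new double[] {x, y});
    }

    void launch() { running = true; }   // stand-in for the "launch" button
    void stop() { running = false; }    // stand-in for the "stop" button
    boolean isRunning() { return running; }

    // Returns the label whose centre is closest to (x, y), or null if the map is empty.
    String nearestViseme(double x, double y) {
        String best = null;
        double bestDist = Double.POSITIVE_INFINITY;
        for (Map.Entry<String, double[]> e : visemeMap.entrySet()) {
            double dx = e.getValue()[0] - x;
            double dy = e.getValue()[1] - y;
            double dist = dx * dx + dy * dy; // squared Euclidean distance
            if (dist < bestDist) {
                bestDist = dist;
                best = e.getKey();
            }
        }
        return best;
    }

    // Handles one x-y position; returns the (hypothetical) message for the face, or null.
    String onPosition(double x, double y) {
        if (!running || visemeMap.isEmpty()) {
            return null; // face not launched, or no map loaded
        }
        return "viseme " + nearestViseme(x, y);
    }

    public static void main(String[] args) {
        ArtisynthManagerSketch m = new ArtisynthManagerSketch();
        // Made-up centres, for illustration only.
        m.addViseme("AA", 0.2, 0.9);
        m.addViseme("IY", 0.9, 0.1);
        m.addViseme("UW", 0.1, 0.1);
        System.out.println(m.onPosition(0.8, 0.2)); // null: not launched yet
        m.launch();
        System.out.println(m.onPosition(0.8, 0.2)); // viseme IY
        m.stop();
    }
}
```

The nearest-centre lookup is used here only because it is the simplest way to turn a continuous x-y position into a discrete viseme. The mapping actually loaded from the "Vizeme map" menu may work differently.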


Page last modified on August 20, 2008, at 03:28 PM