ArtiSynth Modeling Guide

Last update: Apr 10, 2017

Contents:

- 1 ArtiSynth Overview

- 2 Supporting classes

- 3 Mechanical Models I

- 4 Mechanical Models II

- 5 Simulation Control

- 6 Finite Element Models

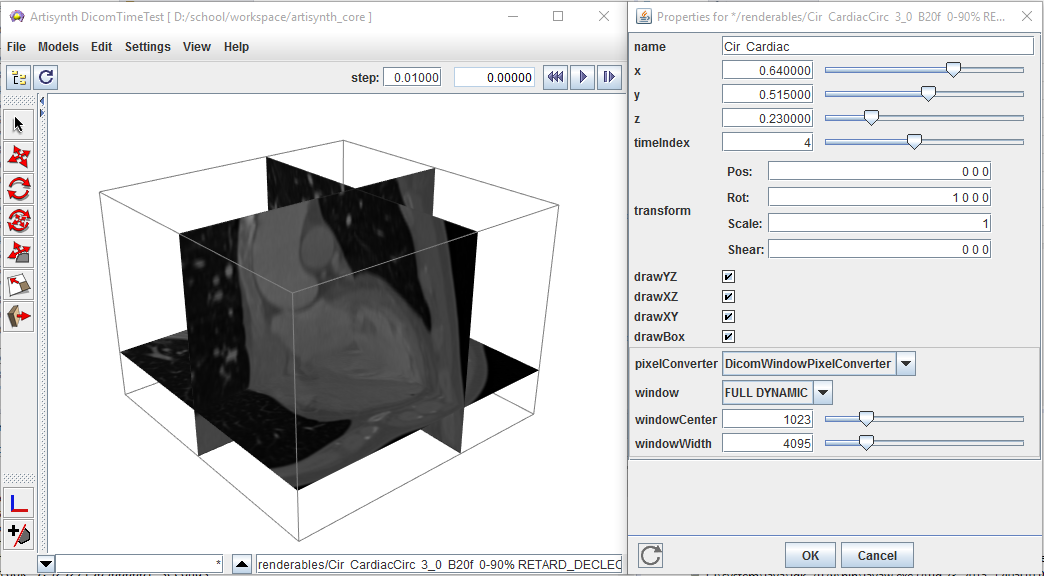

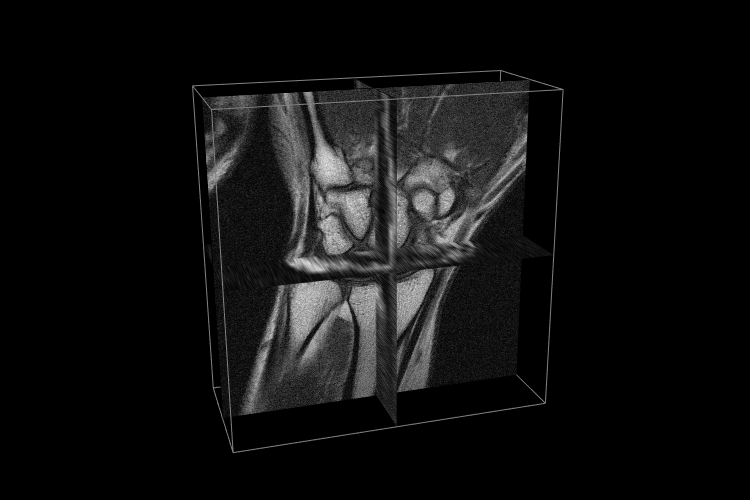

- 7 DICOM Images

- A Mathematical Review

Preface

This guide describes how to create mechanical and biomechanical models in ArtiSynth using its Java API.

It is assumed that the reader is familiar with basic Java programming, including variable assignment, control flow, exceptions, functions and methods, object construction, inheritance, and method overloading. Some familiarity with the basic I/O classes defined in java.io.*, including input and output streams and the specification of file paths using File, as well as the collection classes ArrayList and LinkedList defined in java.util.*, is also assumed.

How to read this guide

Section 1 offers a general overview of ArtiSynth’s software design, and briefly describes the algorithms used for physical simulation (Section 1.2). The latter section may be skipped on first reading. A more comprehensive overview paper is available online.

The remainder of the manual gives details instructions on how to build various types of mechanical and biomechanical models. Sections 3 and 4 give detailed information about building general mechanical models, involving particles, springs, rigid bodies, joints, constraints, and contact. Section 5 describes how to add control panels, controllers, and input and output data streams to a simulation. Section 6 describes how to incorporate finite element models. The required mathematics is reviewed in Section A.

If time permits, the reader will profit from a top-to-bottom read. However, this may not always be necessary. Many of the sections contain detailed examples, all of which are available in the package artisynth.demos.tutorial and which may be run from ArtiSynth using Models > All demos > tutorials. More experienced readers may wish to find an appropriate example and then work backwards into the text and preceeding sections for any needed explanatory detail.

Chapter 1 ArtiSynth Overview

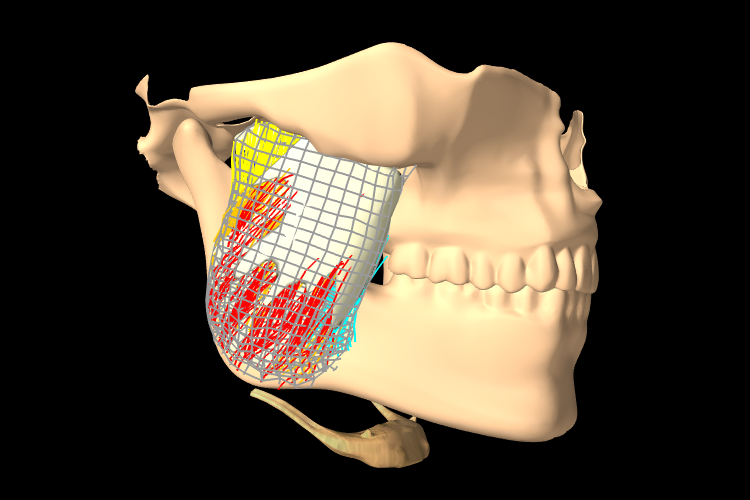

ArtiSynth is an open-source, Java-based system for creating and simulating mechanical and biomechanical models, with specific capabilities for the combined simulation of rigid and deformable bodies, together with contact and constraints. It is presently directed at application domains in biomechanics, medicine, physiology, and dentistry, but it can also be applied to other areas such as traditional mechanical simulation, ergonomic design, and graphical and visual effects.

1.1 System structure

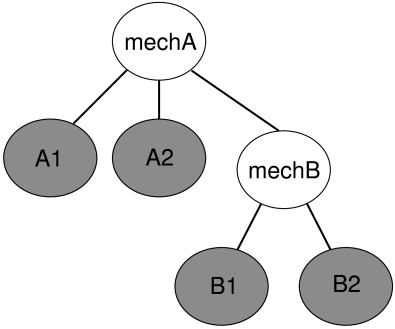

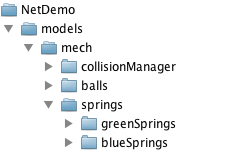

An ArtiSynth model is composed of a hierarchy of models and model components which are implemented by various Java classes. These may include sub-models (including finite element models), particles, rigid bodies, springs, connectors, and constraints. The component hierarchy may be in turn connected to various agent components, such as control panels, controllers and monitors, and input and output data streams (i.e., probes), which have the ability to control and record the simulation as it advances in time. Agents are presented in more detail in Section 5.

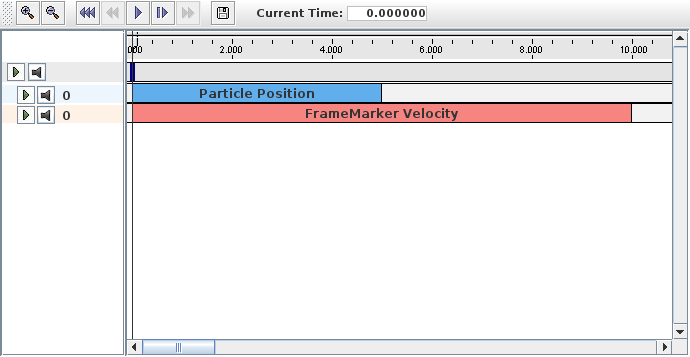

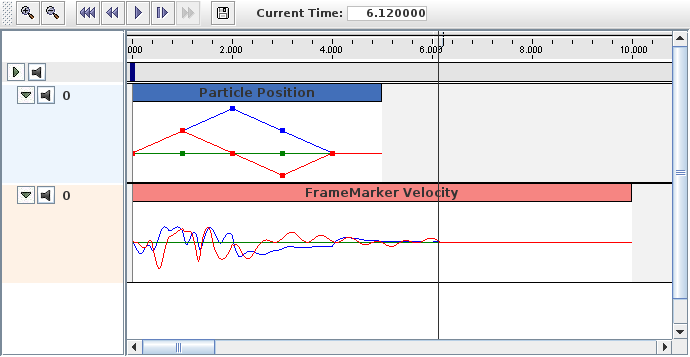

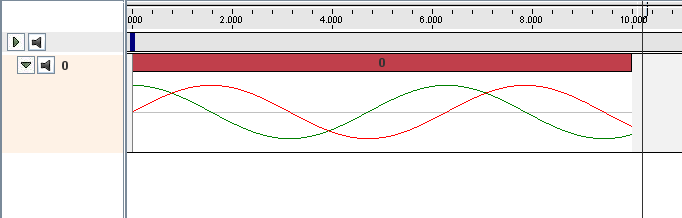

The models and agents are collected together within a top-level component known as a root model. Simulation proceeds under the control of a scheduler, which advances the models through time using a physics simulator. A rich graphical user interface (GUI) allows users to view and edit the model hierarchy, modify component properties, and edit and temporally arrange the input and output probes using a timeline display.

1.1.1 Model components

Every ArtiSynth component is an instance of ModelComponent. When connected to the hierarchy, it is assigned a unique number relative to its parent; the parent and number can be obtained using the methods getParent() and getNumber(), respectively. Components may also be assigned a name (using setName()) which is then returned using getName().

A sub-interface of ModelComponent includes CompositeComponent, which contains child components. A ComponentList is a CompositeComponent which simply contains a list of other components (such as particles, rigid bodies, sub-models, etc.).

Components which contain state information (such as position and velocity) should extend HasState, which provides the methods getState() and setState() for saving and restoring state.

A Model is a sub-interface of CompositeComponent and HasState that contains the notion of advancing through time and which implements this with the methods initialize(t0) and advance(t0, t1, flags), as discussed further in Section 1.1.4. The most common instance of Model used in ArtiSynth is MechModel (Section 1.1.5), which is the top-level container for a mechanical or biomechanical model.

1.1.2 The RootModel

The top-level component in the hierarchy is the root model, which is a subclass of RootModel and which contains a list of models along with lists of agents used to control and interact with these models. The component lists in RootModel include:

Each agent may be associated with a specific top-level model.

1.1.3 Component path names

The names and/or numbers of a component and its ancestors can be used to form a component path name. This path has a construction analogous to Unix file path names, with the ’/’ character acting as a separator. Absolute paths start with ’/’, which indicates the root model. Relative paths omit the leading ’/’ and can begin lower down in the hierarchy. A typical path name might be

/models/JawHyoidModel/axialSprings/lad

For nameless components in the path, their numbers can be used instead. Numbers can also be used for components that have names. Hence the path above could also be represented using only numbers, as in

/0/0/1/5

although this would most likely appear only in machine-generated output.

1.1.4 Model advancement

ArtiSynth simulation proceeds by advancing all of the root model’s top-level models through a sequence of time steps. Every time step is achieved by calling each model’s advance() method:

This method advances the model from time t0 to time t1, performing whatever physical simulation is required (see Section 1.2). The method may optionally return a StepAdjusment indicating that the step size (t1 - t0) was too large and that the advance should be redone with a smaller step size.

The root model has it’s own advance(), which in turn calls the advance method for all of the top-level models, in sequence. The advance of each model is surrounded by the application of whatever agents are associated with that model. This is done by calling the agent’s apply() method:

Agents not associated with a specific model are applied before (or after) the advance of all other models.

More precise details about model advancement are given in the ArtiSynth Reference Manual.

1.1.5 MechModel

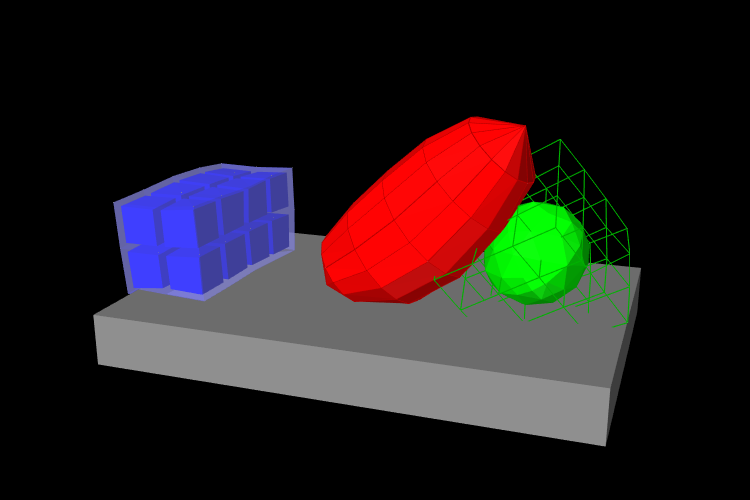

Most ArtiSynth applications contain a single top-level model which is an instance of MechModel. This is a CompositeComponent that may (recursively) contain an arbitrary number of mechanical components, including finite element models, other MechModels, particles, rigid bodies, constraints, attachments, and various force effectors. The MechModel advance() method invokes a physics simulator that advances these components forward in time (Section 1.2).

For convenience each MechModel contains a number of predefined containers for different component types, including:

Each of these is a child component of MechModel and is implemented as a ComponentList. Special methods are provided for adding and removing items from them. However, applications are not required to use these containers, and may instead create any component containment structure that is appropriate. If not used, the containers will simply remain empty.

1.2 Physics simulation

Only a brief summary of ArtiSynth physics simulation is described here. Full details are given in [5] and in the related overview paper.

For purposes of physics simulation, the components of a MechModel are grouped as follows:

- Dynamic components

-

Components, such as a particles and rigid bodies, that contain position and velocity state, as well as mass. All dynamic components are instances of the Java interface DynamicComponent. - Force effectors

-

Components, such as springs or finite elements, that exert forces between dynamic components. All force effectors are instances of the Java interface ForceEffector. - Constrainers

-

Components that enforce constraints between dynamic components. All constrainers are instances of the Java interface Constrainer. - Attachments

-

Attachments between dynamic components. While technically these are constraints, they are implemented using a different approach. All attachment components are instances of DynamicAttachment.

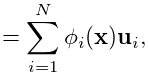

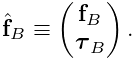

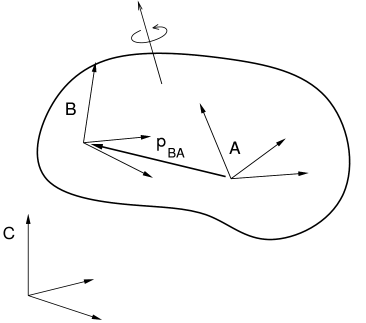

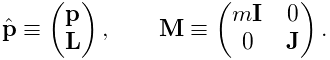

The positions, velocities, and forces associated with all the

dynamic components are denoted by the composite vectors

![]() ,

, ![]() , and

, and ![]() .

In addition, the composite mass matrix is given by

.

In addition, the composite mass matrix is given by

![]() .

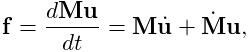

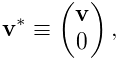

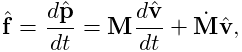

Newton’s second law then gives

.

Newton’s second law then gives

|

(1.1) |

where the ![]() accounts for various ``fictitious'' forces.

accounts for various ``fictitious'' forces.

Each integration step involves solving for

the velocities ![]() at time step

at time step ![]() given the velocities and forces

at step

given the velocities and forces

at step ![]() . One way to do this is to solve the expression

. One way to do this is to solve the expression

| (1.2) |

for ![]() , where

, where ![]() is the step size and

is the step size and

![]() . Given the updated velocities

. Given the updated velocities ![]() , one can

determine

, one can

determine ![]() from

from

| (1.3) |

where ![]() accounts for situations (like rigid bodies) where

accounts for situations (like rigid bodies) where ![]() , and then solve for the updated positions using

, and then solve for the updated positions using

| (1.4) |

(1.2) and (1.4) together comprise a simple symplectic Euler integrator.

In addition to forces, bilateral and unilateral constraints give rise to

locally linear constraints on ![]() of the form

of the form

| (1.5) |

Bilateral constraints may include rigid body joints, FEM

incompressibility, and point-surface constraints, while unilateral

constraints include contact and joint limits. Constraints give rise

to constraint forces (in the directions ![]() and

and ![]() )

which supplement the forces of (1.1) in order to enforce

the constraint conditions. In addition, for unilateral constraints,

we have a complementarity condition in which

)

which supplement the forces of (1.1) in order to enforce

the constraint conditions. In addition, for unilateral constraints,

we have a complementarity condition in which ![]() implies no

constraint force, and a constraint force implies

implies no

constraint force, and a constraint force implies ![]() . Any

given constraint usually involves only a few dynamic components and so

. Any

given constraint usually involves only a few dynamic components and so

![]() and

and ![]() are generally sparse.

are generally sparse.

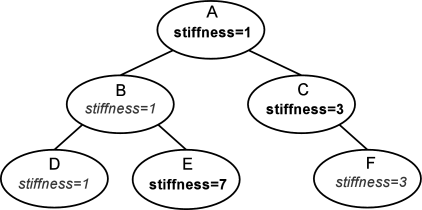

Adding constraints to the velocity solve (1.2) leads to a mixed linear complementarity problem (MLCP) of the form

|

|||

| (1.6) |

where ![]() is a slack variable,

is a slack variable, ![]() and

and ![]() give the force

constraint impulses over the time step, and

give the force

constraint impulses over the time step, and ![]() and

and ![]() are derivative

terms arising if

are derivative

terms arising if ![]() and

and ![]() are time varying. In addition,

are time varying. In addition,

![]() and

and ![]() are

are ![]() and

and ![]() augmented with stiffness

and damping terms terms to accommodate implicit integration, which

is often required for problems involving deformable bodies.

augmented with stiffness

and damping terms terms to accommodate implicit integration, which

is often required for problems involving deformable bodies.

Attachments can be implemented by constraining the velocities of the attached components using special constraints of the form

| (1.7) |

where ![]() and

and ![]() denote the velocities of the attached and

non-attached components. The constraint matrix

denote the velocities of the attached and

non-attached components. The constraint matrix ![]() is

sparse, with a non-zero block entry for each master component to

which the attached component is connected. The simplest case involves

attaching a point

is

sparse, with a non-zero block entry for each master component to

which the attached component is connected. The simplest case involves

attaching a point ![]() to another point

to another point ![]() , with the simple velocity relationship

, with the simple velocity relationship

| (1.8) |

That means that ![]() has a single entry of

has a single entry of ![]() (where

(where ![]() is the

is the ![]() identity matrix) in the

identity matrix) in the ![]() -th block column.

Another common case involves connecting a point

-th block column.

Another common case involves connecting a point ![]() to

a rigid frame

to

a rigid frame ![]() . The velocity relationship for this is

. The velocity relationship for this is

| (1.9) |

where ![]() and

and ![]() are the translational and rotational

velocity of the frame and

are the translational and rotational

velocity of the frame and ![]() is the location of the point relative

to the frame’s origin (as seen in world coordinates). The corresponding

is the location of the point relative

to the frame’s origin (as seen in world coordinates). The corresponding

![]() contains a single

contains a single ![]() block entry of the form

block entry of the form

| (1.10) |

in the ![]() block column, where

block column, where

![[l]\equiv\left(\begin{matrix}0&-l_{z}&l_{y}\\

l_{z}&0&-l_{x}\\

-l_{y}&l_{x}&0\end{matrix}\right)](mi/mi17.png) |

(1.11) |

is a skew-symmetric cross product matrix.

The attachment constraints ![]() could be added directly to

(1.6), but their special form allows us to

explicitly solve for

could be added directly to

(1.6), but their special form allows us to

explicitly solve for ![]() , and hence reduce the size of

(1.6), by factoring out the attached velocities

before solution.

, and hence reduce the size of

(1.6), by factoring out the attached velocities

before solution.

The MLCP (1.6) corresponds to a single step integrator. However, higher order integrators, such as Newmark methods, usually give rise to MLCPs with an equivalent form. Most ArtiSynth integrators use some variation of (1.6) to determine the system velocity at each time step.

To set up (1.6), the MechModel component

hierarchy is traversed and the methods of the different component

types are queried for the required values. Dynamic components (type

DynamicComponent) provide ![]() ,

, ![]() , and

, and ![]() ; force effectors

(ForceEffector) determine

; force effectors

(ForceEffector) determine ![]() and the stiffness/damping

augmentation used to produce

and the stiffness/damping

augmentation used to produce ![]() ; constrainers (Constrainer) supply

; constrainers (Constrainer) supply ![]() ,

, ![]() ,

, ![]() and

and ![]() , and attachments (DynamicAttachment) provide the information needed to factor out

attached velocities.

, and attachments (DynamicAttachment) provide the information needed to factor out

attached velocities.

1.3 Basic packages

The core code of the ArtiSynth project is divided into three main packages, each with a number of sub-packages.

1.3.1 maspack

The packages under maspack contain general computational utilities that are independent of ArtiSynth and could be used in a variety of other contexts. The main packages are:

1.3.2 artisynth.core

The packages under artisynth.core contain the core code for ArtiSynth model components and its GUI infrastructure.

1.3.3 artisynth.demos

These packages contain demonstration models that illustrate ArtiSynth’s modeling capabilities:

1.4 Properties

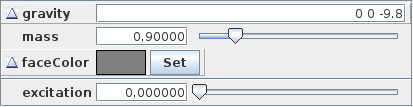

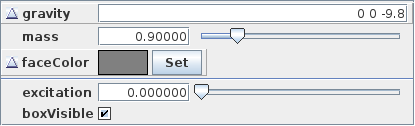

ArtiSynth components expose properties, which provide a uniform interface for accessing their internal parameters and state. Properties vary from component to component; those for RigidBody include position, orientation, mass, and density, while those for AxialSpring include restLength and material. Properties are particularly useful for automatically creating control panels and probes, as described in Section 5. They are also used for automating component serialization.

Properties are described only briefly in this section; more detailed descriptions are available in the Maspack Reference Manual and the overview paper.

The set of properties defined for a component is fixed for that component’s class; while property values may vary between component instances, their definitions are class-specific. Properties are exported by a class through code contained in the class definition, as described in Section 5.2.

1.4.1 Property handles and paths

Each property has a unique name which may be used to obtain a property handle through which the property’s value may be queried or set for a particular component. Property handles are implemented by the class Property and are returned by the component’s getProperty() method. getProperty() takes a property’s name and returns the corresponding handle. For example, components of type Muscle have a property excitation, for which a handle may be obtained using a code fragment such as

Property handles can also be obtained for sub-components, using a property path that consists of a path to the sub-component followed by a colon ‘:’ and the property name. For example, to obtain the excitation property for a sub-component located by axialSprings/lad relative to a MechModel, one could use a call of the form

1.4.2 Composite and inheritable properties

Composite properties are possible, in which a property value is a composite object that in turn has sub-properties. A good example of this is the RenderProps class, which is associated with the property renderProps for renderable objects and which itself can have a number of sub-properties such as visible, faceStyle, faceColor, lineStyle, lineColor, etc.

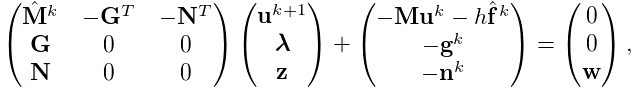

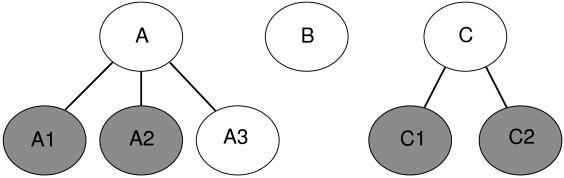

Properties can be declared to be inheritable, so that their values can be inherited from the same properties hosted by ancestor components further up the component hierarchy. Inheritable properties require a more elaborate declaration and are associated with a mode which may be either Explicit or Inherited. If a property’s mode is inherited, then its value is obtained from the closest ancestor exposing the same property whose mode is explicit. In Figure (1.1), the property stiffness is explicitly set in components A, C, and E, and inherited in B and D (which inherit from A) and F (which inherits from C).

1.5 Creating an application model

ArtiSynth applications are created by writing and compiling an application model that is a subclass of RootModel. This application-specific root model is then loaded and run by the ArtiSynth program.

The code for the application model should:

-

•

Declare a no-args constructor

-

•

Override the RootModel build() method to construct the application.

ArtiSynth can load a model either using the build method or by reading it from a file:

- Build method

-

ArtiSynth creates an instance of the model using the no-args constructor, assigns it a name (which is either user-specified or the simple name of the class), and then calls the build() method to perform the actual construction.

- Reading from a file

-

ArtiSynth creates an instance of the model using the no-args constructor, and then the model is named and constructed by reading the file.

The no-args constructor should perform whatever initialization is required in both cases, while the build() method takes the place of the file specification. Unless a model is originally created using a file specification (which is very tedious), the first time creation of a model will almost always entail using the build() method.

The general template for application model code looks like this:

Here, the model itself is called MyModel, and is defined in the (hypothetical) package artisynth.models.experimental (placing models in the super package artisynth.models is common practice but not necessary).

Note: The build() method was only introduced in ArtiSynth 3.1. Prior to that, application models were constructed using a constructor taking a String argument supplying the name of the model. This method of model construction still works but is deprecated.

1.5.1 Implementing the build() method

As mentioned above, the build() method is responsible for actual model construction. Many applications are built using a single top-level MechModel. Build methods for these may look like the following:

First, a MechModel is created (with the name "mech" in this example, although any name, or no name, may be given) and added to the list of models in the root model. Subsequent code then creates and adds the components required by the MechModel, as described in Sections 3, 4 and 6. The build() method also creates and adds to the root model any agents required by the application (controllers, probes, etc.), as described in Section 5.

When constructing a model, there is no fixed order in which components need to be added. For instance, in the above example, addModel(mech) could be called near the end of the build() method rather than at the beginning. The only restriction is that when a component is added to the hierarchy, all other components that it refers to should already have been added to the hierarchy. For instance, an axial spring (Section 3.1) refers to two points. When it is added to the hierarchy, those two points should already be present in the hierarchy.

The build() method supplies a String array as an argument, which can be used to transmit application arguments in a manner analogous to the args argument passed to static main() methods. In cases where the model is specified directly on the ArtiSynth command line (using the -model <classname> option), it is possible to also specify arguments for the build() method. This is done by enclosing the desired arguments within square brackets [ ] immediately following the -model option. So, for example,

> artisynth -model projects.MyModel [ -foo 123 ]

would cause the strings "-foo" and "123" to be passed to the build() method of MyModel.

1.5.2 Making models visible to ArtiSynth

In order to load an application model into ArtiSynth, the classes associated with its implementation must be made visible to ArtiSynth. This usually involves adding the top-level class directory associated with the application code to the classpath used by ArtiSynth.

The demonstration models referred to in this guide belong to the package artisynth.demos.tutorial and are already visible to ArtiSynth.

In most current ArtiSynth projects, classes are stored in a directory tree separate from the source code, with the top-level class directory named classes, located one level below the project root directory. A typical top-level class directory might be stored in a location like this:

/home/joeuser/artisynthProjects/classes

In the example shown in Section 1.5, the model was created in the package artisynth.models.experimental. Since Java classes are arranged in a directory structure that mirrors package names, with respect to the sample project directory shown above, the model class would be located in

/home/joeuser/artisynthProjects/classes/artisynth/models/experimental

At present there are three ways to make top-level class directories known to ArtiSynth:

- Add projects to your Eclipse launch configuration

-

If you are using the Eclipse IDE, then you can add the project in which are developing your model code to the launch configuration that you use to run ArtiSynth. Other IDEs will presumably provide similar functionality.

- Add the directories to the EXTCLASSPATH file

-

You can explicitly list class directories in the file EXTCLASSPATH, located in the ArtiSynth root directory (it may be necessary to create this file).

- Add the directories to your CLASSPATH environment variable

-

If you are running ArtiSynth from the command line, using the artisynth command (or artisynth.bat on Windows), then you can define a CLASSPATH environment variable in your environment and add the needed directories to this.

1.5.3 Loading and running a model

If a model’s classes are visible to ArtiSynth, then it may be loaded into ArtiSynth in several ways:

- Loading by class path

-

A model may be loaded by directly by choosing File > Load from class ... and directly specifying its class name. It is also possible to use the -model <classname> command line argument to have a model loaded directly into ArtiSynth when it starts up.

- Loading from the Models menu

-

A faster way to load a model is by selecting it in one of the Models submenus. This may require editing the model menu configuration files.

- Loading from a file

-

If a model has previously been saved to a file, it may be loaded from that file by choosing File > Load model ....

These methods are described in detail in the section ``Loading and Simulating Models'' of the ArtiSynth User Interface Guide.

The demonstration models referred to in this guide should already be present in the models menu and may be loaded from the submenu Models > All demos > tutorial.

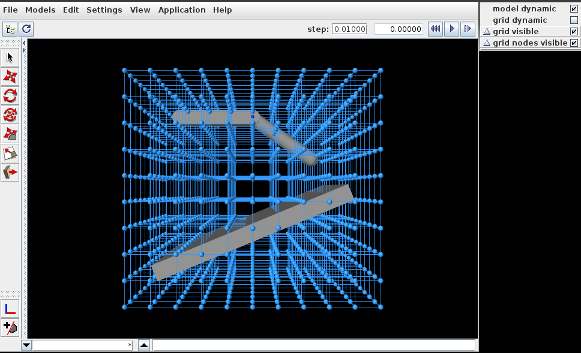

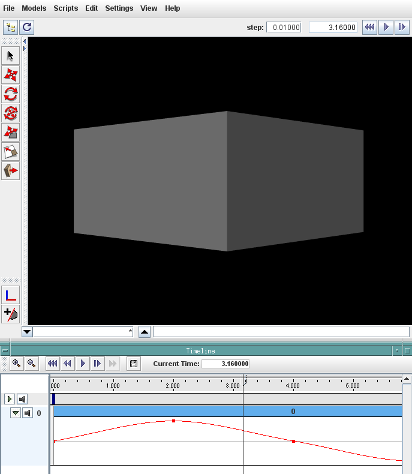

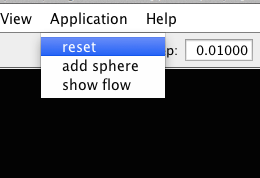

Once a model is loaded, it can be simulated, or run. Simulation of the model can then be started, paused, single-stepped, or reset using the play controls (Figure 1.2) located at the upper right of the ArtiSynth window frame.

Comprehensive information on exploring and interacting with models is given in the ArtiSynth User Interface Guide.

Chapter 2 Supporting classes

ArtiSynth uses a large number of supporting classes, mostly defined in the super package maspack, for handling mathematical and geometric quantities. Those that are referred to in this manual are summarized in this section.

2.1 Vectors and matrices

Among the most basic classes are those used to implement vectors and matrices, defined in maspack.matrix. All vector classes implement the interface Vector and all matrix classes implement Matrix, which provide a number of standard methods for setting and accessing values and reading and writing from I/O streams.

General sized vectors and matrices are implemented by VectorNd and MatrixNd. These provide all the usual methods for linear algebra operations such as addition, scaling, and multiplication:

As illustrated in the above example, vectors and matrices both provide a toString() method that allows their elements to be formated using a C-printf style format string. This is useful for providing concise and uniformly formatted output, particularly for diagnostics. The output from the above example is

result= 4.000 12.000 12.000 24.000 20.000

Detailed specifications for the format string are provided in the documentation for NumberFormat.set(String). If either no format string, or the string "%g", is specified, toString() formats all numbers using the full-precision output provided by Double.toString(value).

For computational efficiency, a number of fixed-size vectors and matrices are also provided. The most commonly used are those defined for three dimensions, including Vector3d and Matrix3d:

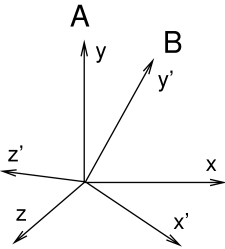

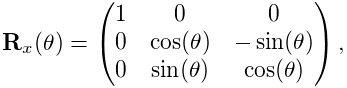

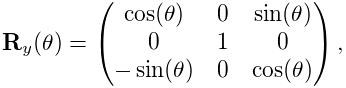

2.2 Rotations and transformations

maspack.matrix contains a number classes that implement rotation matrices, rigid transforms, and affine transforms.

Rotations (Section A.1) are commonly described using a RotationMatrix3d, which implements a rotation matrix and contains numerous methods for setting rotation values and transforming other quantities. Some of the more commonly used methods are:

Rotations can also be described by AxisAngle, which characterizes a rotation as a single rotation about a specific axis.

Rigid transforms (Section A.2) are used by ArtiSynth to describe a rigid body’s pose, as well as its relative position and orientation with respect to other bodies and coordinate frames. They are implemented by RigidTransform3d, which exposes its rotational and translational components directly through the fields R (a RotationMatrix3d) and p (a Vector3d). Rotational and translational values can be set and accessed directly through these fields. In addition, RigidTransform3d provides numerous methods, some of the more commonly used of which include:

Affine transforms (Section A.3) are used by ArtiSynth to effect scaling and shearing transformations on components. They are implemented by AffineTransform3d.

Rigid transformations are actually a specialized form of affine transformation in which the basic transform matrix equals a rotation. RigidTransform3d and AffineTransform3d hence both derive from the same base class AffineTransform3dBase.

2.3 Points and Vectors

The rotations and transforms described above can be used to transform both vectors and points in space.

Vectors are most commonly implemented using Vector3d, while points can be implemented using the subclass Point3d. The only difference between Vector3d and Point3d is that the former ignores the translational component of rigid and affine transforms; i.e., as described in Sections A.2 and A.3, a vector v has an implied homogeneous representation of

|

(2.1) |

while the representation for a point p is

|

(2.2) |

Both classes provide a number of methods for applying rotational and affine transforms. Those used for rotations are

where R is a rotation matrix and v1 is a vector (or a point in the case of Point3d).

The methods for applying rigid or affine transforms include:

where X is a rigid or affine transform. As described above, in the case of Vector3d, these methods ignore the translational part of the transform and apply only the matrix component (R for a RigidTransform3d and A for an AffineTransform3d). In particular, that means that for a RigidTransform3d given by X and a Vector3d given by v, the method calls

produce the same result.

2.4 Spatial vectors and inertias

The velocities, forces and inertias associated with 3D coordinate frames and rigid bodies are represented using the 6 DOF spatial quantities described in Sections A.5 and A.6. These are implemented by classes in the package maspack.spatialmotion.

Spatial velocities (or twists) are implemented by Twist, which exposes its translational and angular velocity components through the publicly accessible fields v and w, while spatial forces (or wrenches) are implemented by Wrench, which exposes its translational force and moment components through the publicly accessible fields f and m.

Both Twist and Wrench contain methods for algebraic operations such as addition and scaling. They also contain transform() methods for applying rotational and rigid transforms. The rotation methods simply transform each component by the supplied rotation matrix. The rigid transform methods, on the other hand, assume that the supplied argument represents a transform between two frames fixed within a rigid body, and transform the twist or wrench accordingly, using either (A.27) or (A.29).

The spatial inertia for a rigid body is implemented by SpatialInertia, which contains a number of methods for setting its value given various mass, center of mass, and inertia values, and querying the values of its components. It also contains methods for scaling and adding, transforming between coordinate systems, inversion, and multiplying by spatial vectors.

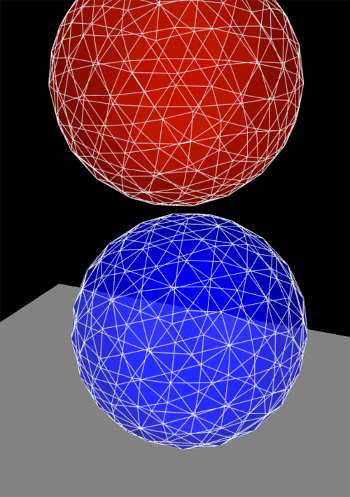

2.5 Meshes

ArtiSynth makes extensive use of 3D meshes, which are defined in maspack.geometry. They are used for a variety of purposes, including visualization, collision detection, and computing physical properties (such as inertia or stiffness variation within a finite element model).

A mesh is essentially a collection of vertices (i.e., points) that are topologically connected in some way. All meshes extend the abstract base class MeshBase, which supports the vertex definitions, while subclasses provide the topology.

Through MeshBase, all meshes provide methods for adding and accessing vertices. Some of these include:

Vertices are implemented by Vertex3d, which defines the position of the vertex (returned by the method getPosition()), and also contains support for topological connections. In addition, each vertex maintains an index, obtainable via getIndex(), that equals the index of its location within the mesh’s vertex list. This makes it easy to set up parallel array structures for augmenting mesh vertex properties.

Mesh subclasses currently include:

- PolygonalMesh

-

Implements a 2D surface mesh containing faces implemented using half-edges.

- PolylineMesh

-

Implements a mesh consisting of connected line-segments (polylines).

- PointMesh

-

Implements a point cloud with no topological connectivity.

PolygonalMesh is used quite extensively and provides a number of methods for implementing faces, including:

The class Face implements a face as a counter-clockwise arrangement of vertices linked together by half-edges (class HalfEdge). Face also supplies a face’s (outward facing) normal via getNormal().

Some mesh uses within ArtiSynth, such as collision detection, require a triangular mesh; i.e., one where all faces have three vertices. The method isTriangular() can be used to check for this. Meshes that are not triangular can be made triangular using triangulate().

2.5.1 Mesh creation

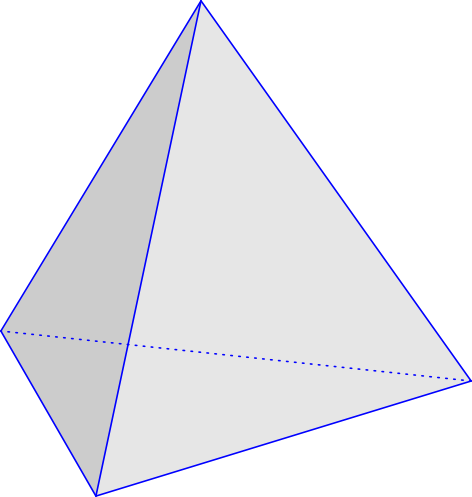

It is possible to create a mesh by direct construction. For example, the following code fragment creates a simple closed tetrahedral surface:

However, meshes are more commonly created using either one of the factory methods supplied by MeshFactory, or by reading a definition from a file (Section 2.5.5).

Some of the more commonly used factory methods for creating polyhedral meshes include:

Each factory method creates a mesh in some standard coordinate

frame. After creation, the mesh can be transformed using the

transform(X) method, where X is either a rigid transform (

RigidTransform3d) or a more general affine

transform (AffineTransform3d).

For example, to create a rotated box centered on ![]() ,

one could do:

,

one could do:

One can also scale a mesh using scale(s), where s is a single scale factor, or scale(sx,sy,sz), where sx, sy, and sz are separate scale factors for the x, y and z axes. This provides a useful way to create an ellipsoid:

MeshFactory can also be used to create new meshes by performing boolean operations on existing ones:

2.5.2 Setting normals, colors, and textures

Meshes provide support for adding normal, color, and texture information, with the exact interpretation of these quantities depending upon the particular mesh subclass. Most commonly this information is used simply for rendering, but in some cases normal information might also be used for physical simulation.

For polygonal meshes, the normal information described here is used only for smooth shading. When flat shading is requested, normals are determined directly from the faces themselves.

Normal information can be set and queried using the following methods:

The method setNormals() takes two arguments: a set of normal vectors (nrmls), along with a set of index values (indices) that map these normals onto the vertices of each of the mesh’s geometric features. Often, there will be one unique normal per vertex, in which case nrmls will have a size equal to the number of vertices, but this is not always the case, as described below. Features for the different mesh subclasses are: faces for PolygonalMesh, polylines for PolylineMesh, and vertices for PointMesh. If indices is specified as null, then normals is assumed to have a size equal to the number of vertices, and an appropriate index set is created automatically using createVertexIndices() (described below). Otherwise, indices should have a size of equal to the number of features times the number of vertices per feature. For example, consider a PolygonalMesh consisting of two triangles formed from vertex indices (0, 1, 2) and (2, 1, 3), respectively. If normals are specified and there is one unique normal per vertex, then the normal indices are likely to be

[ 0 1 2 2 1 3 ]

As mentioned above, sometimes there may be more than one normal per vertex. This happens in cases when the same vertex uses different normals for different faces. In such situations, the size of the nrmls argument will exceed the number of vertices.

The method setNormals() makes internal copies of the specified normal and index information, and this information can be later read back using getNormals() and getNormalIndices(). The number of normals can be queried using numNormals(), and individual normals can be queried or set using getNormal(idx) and setNormal(idx,nrml). All normals and indices can be explicitly cleared using clearNormals().

Color and texture information can be set using analagous methods. For colors, we have

When specified as float[], colors are given as RGB or

RGBA values, in the range ![]() , with array lengths of 3 and 4,

respectively. The colors returned by

getColors() are always RGBA

values.

, with array lengths of 3 and 4,

respectively. The colors returned by

getColors() are always RGBA

values.

With colors, there may often be fewer colors than the number of vertices. For instance, we may have only two colors, indexed by 0 and 1, and want to use these to alternately color the mesh faces. Using the two-triangle example above, the color indices might then look like this:

[ 0 0 0 1 1 1 ]

Finally, for texture coordinates, we have

When specifying indices using setNormals, setColors, or setTextureCoords, it is common to use the same index set as that which associates vertices with features. For convenience, this index set can be created automatically using

Alternatively, we may sometimes want to create a index set that assigns the same attribute to each feature vertex. If there is one attribute per feature, the resulting index set is called a feature index set, and can be created using

If we have a mesh with three triangles and one color per triangle, the resulting feature index set would be

[ 0 0 0 1 1 1 2 2 2 ]

Note: when a mesh is modified by the addition of new features (such as faces for PolygonalMesh), all normal, color and texture information is cleared by default (with normal information being automatically recomputed on demand if automatic normal creation is enabled; see Section 2.5.3). When a mesh is modified by the removal of features, the index sets for normals, colors and textures are adjusted to account for the removal.

For colors, it is possible to request that a mesh explicitly maintain colors for either its vertices or features (Section 2.5.4). When this is done, colors will persist when vertices or features are added or removed, with default colors being automatically created as necessary.

Once normals, colors, or textures have been set, one may want to know which of these attributes are associated with the vertices of a specific feature. To know this, it is necessary to find that feature’s offset into the attribute’s index set. This offset information can be found using the array returned by

For example, the three normals associated with a triangle at index ti can be obtained using

Alternatively, one may use the convenience methods

which return the attribute values for the ![]() -th vertex of

the feature indexed by fidx.

-th vertex of

the feature indexed by fidx.

In general, the various get methods return references to internal storage information and so should not be modified. However, specific values within the lists returned by getNormals(), getColors(), or getTextureCoords() may be modified by the application. This may be necessary when attribute information changes as the simultion proceeds. Alternatively, one may use methods such as setNormal(idx,nrml) setColor(idx,color), or setTextureCoords(idx,coords).

Also, in some situations, particularly with colors and textures, it may be desirable to not have color or texture information defined for certain features. In such cases, the corresponding index information can be specified as -1, and the getNormal(), getColor() and getTexture() methods will return null for the features in question.

2.5.3 Automatic creation of normals and hard edges

For some mesh subclasses, if normals are not explicitly set, they are computed automatically whenever getNormals() or getNormalIndices() is called. Whether or not this is true for a particular mesh can be queried by the method

Setting normals explicitly, using a call to setNormals(nrmls,indices), will overwrite any existing normal information, automatically computed or otherwise. The method

will return true if normals have been explicitly set, and false if they have been automatically computed or if there is currently no normal information. To explicitly remove normals from a mesh which has automatic normal generation, one may call setNormals() with the nrmls argument set to null.

More detailed control over how normals are automatically created may be available for specific mesh subclasses. For example, PolygonalMesh allows normals to be created with multiple normals per vertex, for vertices that are associated with either open or hard edges. This ability can be controlled using the methods

Having multiple normals means that even with smooth shading, open or hard edges will still appear sharp. To make an edge hard within a PolygonalMesh, one may use the methods

which control the hardness of edges between individual vertices, specified either directly or using their indices.

2.5.4 Vertex and feature coloring

The method setColors() makes it possible to assign any desired coloring scheme to a mesh. However, it does require that the user explicity reset the color information whenever new features are added.

For convenience, an application can also request that a mesh explicitly maintain colors for either its vertices or features. These colors will then be maintained when vertices or features are added or removed, with default colors being automatically created as necessary.

Vertex-based coloring can be requested with the method

This will create a separate (default) color for each of the mesh’s vertices, and set the color indices to be equal to the vertex indices, which is equivalent to the call

where colors contains a default color for each vertex. However, once vertex coloring is enabled, the color and index sets will be updated whenever vertices or features are added or removed. Meanwhile, applications can query or set the colors for any vertex using getColor(idx), or any of the various setColor methods. Whether or not vertex coloring is enabled can be queried using

Once vertex coloring is established, the application will typically want to set the colors for all vertices, perhaps using a code fragment like this:

Similarly, feature-based coloring can be requested using the method

This will create a separate (default) color for each of the mesh’s features (faces for PolygonalMesh, polylines for PolylineMesh, etc.), and set the color indices to equal the feature index set, which is equivalent to the call

where colors contains a default color for each feature. Applications can query or set the colors for any vertex using getColor(idx), or any of the various setColor methods. Whether or not feature coloring is enabled can be queried using

2.5.5 Reading and writing mesh files

The package maspack.geometry.io supplies a number of classes for writing and reading meshes to and from files of different formats.

Some of the supported formats and their associated readers and writers include:

| Extension | Format | Reader/writer classes |

|---|---|---|

| .obj | Alias Wavefront | WavefrontReader, WavefrontWriter |

| .ply | Polygon file format | PlyReader, PlyWriter |

| .stl | STereoLithography | StlReader, StlWriter |

| .gts | GNU triangulated surface | GtsReader, GtsWriter |

| .off | Object file format | OffReader, OffWriter |

The general usage pattern for these classes is to construct the desired reader or writer with a path to the desired file, and then call readMesh() or writeMesh() as appropriate:

Both readMesh() and writeMesh() may throw I/O exceptions, which must be either caught, as in the example above, or thrown out of the calling routine.

For convenience, one can also use the classes GenericMeshReader or GenericMeshWriter, which internally create an appropriate reader or writer based on the file extension. This enables the writing of code that does not depend on the file format:

Here, fileName can refer to a mesh of any format supported by GenericMeshReader. Note that the mesh returned by readMesh() is explicitly cast to PolygonalMesh. This is because readMesh() returns the superclass MeshBase, since the default mesh created for some file formats may be different from PolygonalMesh.

2.5.6 Reading and writing normal and texture information

When writing a mesh out to a file, normal and texture information are also written if they have been explicitly set and the file format supports it. In addition, by default, automatically generated normal information will also be written if it relies on information (such as hard edges) that can’t be reconstructed from the stored file information.

Whether or not normal information will be written is returned by the method

This will always return true if any of the conditions described above have been met. So for example, if a PolygonalMesh contains hard edges, and multiple automatic normals are enabled (i.e., getMultipleAutoNormals() returns true), then getWriteNormals() will return true.

Default normal writing behavior can be overridden within the MeshWriter classes using the following methods:

where enable should be one of the following values:

- 0

-

normals will never be written;

- 1

-

normals will always be written;

- -1

-

normals will written according to the default behavior described above.

When reading a PolygonalMesh from a file, if the file contains normal information with multiple normals per vertex that suggests the existence of hard edges, then the corresponding edges are set to be hard within the mesh.

Chapter 3 Mechanical Models I

This section details how to build basic multibody-type mechanical models consisting of particles, springs, rigid bodies, joints, and other constraints.

3.1 Springs and particles

The most basic type of mechanical model consists simply of particles connected together by axial springs. Particles are implemented by the class Particle, which is a dynamic component containing a three-dimensional position state, a corresponding velocity state, and a mass. It is an instance of the more general base class Point, which is used to also implement spatial points such as markers which do not have a mass.

3.1.1 Axial springs and materials

An axial spring is a simple spring that connects two points and is

implemented by the class

AxialSpring. This is a force effector component that exerts equal and opposite forces on the

two points, along the line separating them, with a magnitude ![]() that

is a function

that

is a function ![]() of the distance

of the distance ![]() between the points,

and the distance derivative

between the points,

and the distance derivative ![]() .

.

Each axial spring is associated with an axial material,

implemented by a subclass of

AxialMaterial, that specifies

the function ![]() . The most basic type of axial material is

a LinearAxialMaterial, which

determines

. The most basic type of axial material is

a LinearAxialMaterial, which

determines ![]() according to the linear relationship

according to the linear relationship

| (3.1) |

where ![]() is the rest length and

is the rest length and ![]() and

and ![]() are the stiffness and

damping terms. Both

are the stiffness and

damping terms. Both ![]() and

and ![]() are properties of the material, while

are properties of the material, while

![]() is a property of the spring.

is a property of the spring.

Axial springs are assigned a linear axial material by default. More complex, non-linear axial materials may be defined in the package artisynth.core.materials. Setting or querying a spring’s material may be done with the methods setMaterial() and getMaterial().

3.1.2 Example: A simple particle-spring model

An complete application model that implements a simple particle-spring model is given below.

Line 1 of the source defines the package in which the model class will reside, in this case artisynth.demos.tutorial. Lines 3-8 import definitions for other classes that will be used.

The model application class is named ParticleSpring and declared to extend RootModel (line 13), and the build() method definition begins at line 15. (A no-args constructor is also needed, but because no other constructors are defined, the compiler creates one automatically.)

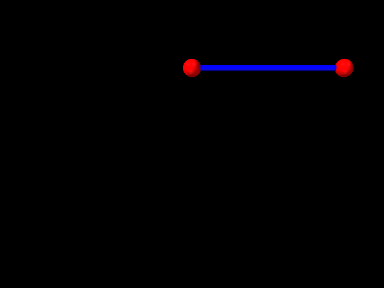

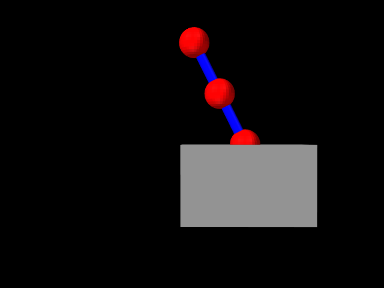

To begin, the build() method creates a MechModel named "mech", and then adds it to the models list of the root model using the addModel() method (lines 18-19). Next, two particles, p1 and p2, are created, with masses equal to 2 and initial positions at 0, 0, 0, and 1, 0, 0, respectively (lines 22-23). Then an axial spring is created, with end points set to p1 and p2, and assigned a linear material with a stiffness and damping of 20 and 10 (lines 24-27). Finally, after the particles and the spring are created, they are added to the particles and axialSprings lists of the MechModel using the methods addParticle() and addAxialSpring() (lines 30-32).

At this point in the code, both particles are defined to be

dynamically controlled, so that running the simulation would cause

both to fall under the MechModel’s default gravity acceleration

of ![]() . However, for this example, we want the first

particle to remain fixed in place, so we set it to be non-dynamic (line 34), meaning that the physical simulation will not

update its position in response to forces (Section

3.1.3).

. However, for this example, we want the first

particle to remain fixed in place, so we set it to be non-dynamic (line 34), meaning that the physical simulation will not

update its position in response to forces (Section

3.1.3).

The remaining calls control aspects of how the model is graphically rendered. setBounds() (line 37) increases the model’s ``bounding box'' so that by default it will occupy a larger part of the viewer frustum. The covenience method RenderProps.setSphericalPoints() is used to set points p1 and p2 to render as solid red spheres with a radius of 0.06, while RenderProps.setCylindricalLines() is used to set spring to render as a solid blue cylinder with a radius of 0.02. More details about setting render properties are given in Section 4.3.

3.1.3 Dynamic, parametric, and attached components

By default, a dynamic component is advanced through time in response to the forces applied to it. However, it is also possible to set a dynamic component’s dynamic property to false, so that it does not respond to force inputs. As shown in the example above, this can be done using the method setDynamic():

comp.setDynamic (false);

The method isDynamic() can be used to query the dynamic property.

Dynamic components can also be attached to other dynamic components (as mentioned in Section 1.2) so that their positions and velocities are controlled by the master components that they are attached to. To attach a dynamic component, one creates an AttachmentComponent specifying the attachment connection and adds it to the MechModel, as described in Section 3.5. The method isAttached() can be used to determine if a component is attached, and if it is, getAttachment() can be used to find the corresponding AttachmentComponent.

Overall, a dynamic component can be in one of three states:

- active

-

Component is dynamic and unattached. The method isActive() returns true. The component will move in response to forces.

- parametric

-

Component is not dynamic, and is unattached. The method isParametric() returns true. The component will either remain fixed, or will move around in response to external inputs specifying the component’s position and/or velocity. One way to supply such inputs is to use controllers or input probes, as described in Section 5.

- attached

-

Component is attached. The method isAttached() returns true. The component will move so as to follow the other master component(s) to which it is attached.

3.1.4 Custom axial materials

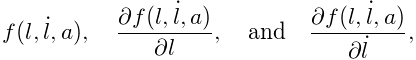

Application authors may create their own axial materials by subclassing AxialMaterial and overriding the functions

where excitation is an additional excitation signal ![]() , which

is used to implement active springs and which in particular is used to

implement axial muscles (Section 4.4), for

which

, which

is used to implement active springs and which in particular is used to

implement axial muscles (Section 4.4), for

which ![]() is usually in the range

is usually in the range ![]() .

.

The first three methods should return the values of

|

(3.2) |

respectively, while the last method should return true if

![]() ; i.e., if it is

always equals to 0.

; i.e., if it is

always equals to 0.

3.1.5 Damping parameters

Mechanical models usually contain damping forces in addition to spring-type restorative forces. Damping generates forces that reduce dynamic component velocities, and is usually the major source of energy dissipation in the model. Damping forces can be generated by the spring components themselves, as described above.

A general damping can be set for all particles by setting the MechModel’s pointDamping property. This causes a force

| (3.3) |

to be applied to all particles, where ![]() is the value of the pointDamping and

is the value of the pointDamping and ![]() is the particle’s velocity.

is the particle’s velocity.

pointDamping can be set and queried using the MechModel methods

In general, whenever a component has a property propX, that property can be set and queried in code using methods of the form

setPropX (T d); T getPropX();where T is the type associated with the property.

pointDamping can also be set for particles individually. This property is inherited (Section 1.4.2), so that if not set explicitly, it inherits the nearest explicitly set value in an ancestor component.

3.2 Rigid bodies

Rigid bodies are implemented in ArtiSynth by the class RigidBody, which is a dynamic component containing a six-dimensional position and orientation state, a corresponding velocity state, an inertia, and an optional surface mesh.

A rigid body is associated with its own 3D spatial coordinate frame, and is a subclass of the more general Frame component. The combined position and orientation of this frame with respect to world coordinates defines the body’s pose, and the associated 6 degrees of freedom describe its ``position'' state.

3.2.1 Frame markers

ArtiSynth makes extensive use of markers, which are (massless) points attached to dynamic components in the model. Markers are used for graphical display, implementing attachments, and transmitting forces back onto the underlying dynamic components.

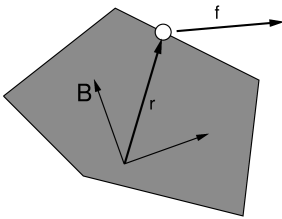

A frame marker is a marker that can be attached to a Frame, and most commonly to a RigidBody (Figure 3.2). They are frequently used to provide the anchor points for attaching springs and, more generally, applying forces to the body.

Frame markers are implemented by the class FrameMarker, which is a subclass of Point. The methods

get and set the marker’s location ![]() with respect to the frame’s

coordinate system. When a 3D force

with respect to the frame’s

coordinate system. When a 3D force ![]() is applied to the marker, it

generates a spatial force

is applied to the marker, it

generates a spatial force ![]() (Section

A.5) on the frame given by

(Section

A.5) on the frame given by

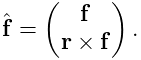

|

(3.4) |

3.2.2 Example: A simple rigid body-spring model

A simple rigid body-spring model is defined in

artisynth.demos.tutorial.RigidBodySpring

This differs from ParticleSpring only in the build() method, which is listed below:

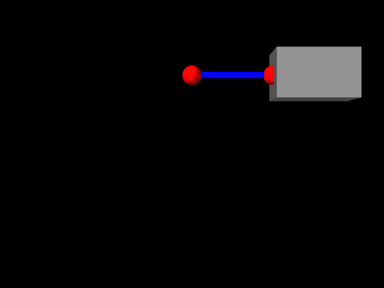

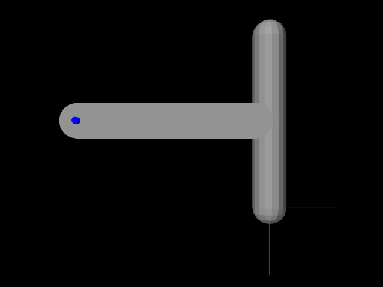

The differences from ParticleSpring begin

at line 9. Instead of creating a second particle, a rigid body is

created using the factory method

RigidBody.createBox(), which

takes x, y, z widths and a (uniform) density and creates a box-shaped

rigid body complete with surface mesh and appropriate mass and

inertia. As the box is initially centered at the origin, moving it

elsewhere requires setting the body’s pose, which is done using setPose(). The RigidTransform3d passed to setPose() is

created using a three-argument constructor that generates a

translation-only transform. Next, starting at line 14, a FrameMarker is created for a location ![]() relative to the

rigid body, and attached to the body using its setFrame()

method.

relative to the

rigid body, and attached to the body using its setFrame()

method.

The remainder of build() is the same as for ParticleSpring, except that the spring is attached to the frame marker instead of a second particle.

3.2.3 Creating rigid bodies

As illustrated above, rigid bodies can be created using factory methods supplied by RigidBody. Some of these include:

The bodies do not need to be named; if no name is desired, then name and can be specified as null.

In addition, there are also factory methods for creating a rigid body directly from a mesh:

These take either a polygonal mesh (Section 2.5), or a file name from which a mesh is read, and use it as the body’s surface mesh and then compute the mass and inertia properties from the specified (uniform) density.

Alternatively, one can create a rigid body directly from a constructor, and then set the mesh and inertia properties explicitly:

3.2.4 Pose and velocity

A body’s pose can be set and queried using the methods

These use a RigidTransform3d (Section

2.2) to describe the pose. Body poses are

described in world coordinates and specify the transform from body to

world coordinates. In particular, the pose for a body A specifies

the rigid transform ![]() .

.

Rigid bodies also expose the translational and rotational components of their pose via the properties position and orientation, which can be queried and set independently using the methods

The velocity of a rigid body is described using a Twist (Section 2.4), which contains both the translational and rotational velocities. The following methods set and query the spatial velocity as described with respect to world coordinates:

During simulation, unless a rigid body has been set to be parametric (Section 3.1.3), its pose and velocity are updated in response to forces, so setting the pose or velocity generally makes sense only for setting initial conditions. On the other hand, if a rigid body is parametric, then it is possible to control its pose during the simulation, but in that case it is better to set its target pose and/or target velocity, as described in Section 5.3.1.

3.2.5 Inertia and meshes

The ``mass'' of a rigid body is described by its spatial inertia (Section A.6), implemented by a SpatialInertia object, which specifies its mass, center of mass, and rotational inertia with respect to the center of mass.

Most rigid bodies are also associated with a polygonal surface mesh, which can be set and queried using the methods

The second method takes an optional fileName argument that can be set to the name of a file from which the mesh was read. Then if the model itself is saved to a file, the model file will specify the mesh using the file name instead of explicit vertex and face information, which can reduce the model file size considerably.

The inertia of a rigid body can be explicitly set using a variety of methods including

and can be queried using

In practice, it is often more convenient to simply specify a mass or a density, and then use the volume defined by the surface mesh to compute the remaining inertial values. How a rigid body’s inertia is computed is determined by its inertiaMethod property, which can be one

- Density

-

Inertia is computed from density;

- Mass

-

Inertia is computed from mass;

- Explicit

-

Inertia is set explicitly.

This property can be set and queried using

and its default value is Density. Explicitly setting the inertia using one of setInertia() methods described above will set inertiaMethod to Explicit. The method

will (re)compute the inertia using the mesh and a density value and set inertiaMethod to Density, and the method

will (re)compute the inertia using the mesh and mass value and set inertiaMethod to Mass.

Finally, the (assumed uniform) density of the body can be queried using

3.2.6 Damping parameters

As with particles, it is possible to set damping parameters for rigid bodies.

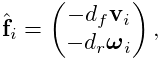

MechModel provides two properties, frameDamping and rotaryDamping, which generate a spatial force centered on each rigid body’s coordinate frame

|

(3.5) |

where ![]() and

and ![]() are the frameDamping and rotaryDamping values, and

are the frameDamping and rotaryDamping values, and ![]() and

and ![]() are the translational

and angular velocity of the body’s coordinate frame.

The damping parameters can be set and queried using the MechModel

methods

are the translational

and angular velocity of the body’s coordinate frame.

The damping parameters can be set and queried using the MechModel

methods

For models involving rigid bodies, it is often necessary to set rotaryDamping to a non-zero value because frameDamping will provide no damping at all when a rigid body is simply rotating about its coordinate frame origin.

Frame and rotary damping can also be set for individual bodies using their own (inherited) frameDamping and rotaryDamping properties.

3.3 Joints and connectors

In a typical mechanical model, many of the rigid bodies are interconnected, either using spring-type components that exert binding forces on the bodies, or through joint-type connectors that enforce the connection using hard constraints.

3.3.1 Joints and coordinate frames

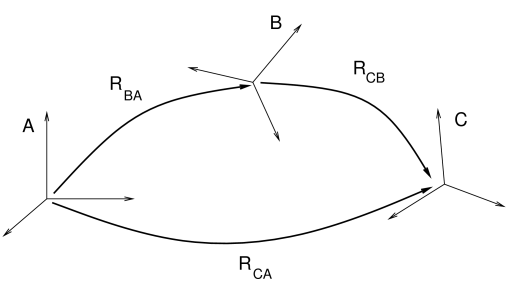

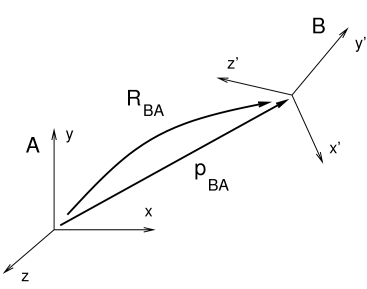

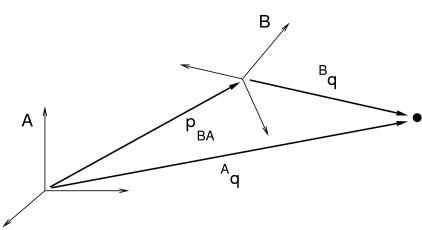

Consider two bodies A and B. The pose of body B with respect to body A

can be described by the 6 DOF rigid transform ![]() . If bodies A

and B are unconnected,

. If bodies A

and B are unconnected, ![]() may assume any possible value

and has a full six degrees of freedom. A joint between A and B

restricts the set of poses that are possible between the two bodies

and reduces the degrees of freedom available to

may assume any possible value

and has a full six degrees of freedom. A joint between A and B

restricts the set of poses that are possible between the two bodies

and reduces the degrees of freedom available to ![]() . For

simplicity, joints have their own coordinate frames for describing

their constraining actions, and then these frames are related to the

frames A and B of the associated bodies by auxiliary transformations.

. For

simplicity, joints have their own coordinate frames for describing

their constraining actions, and then these frames are related to the

frames A and B of the associated bodies by auxiliary transformations.

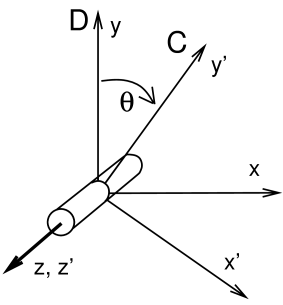

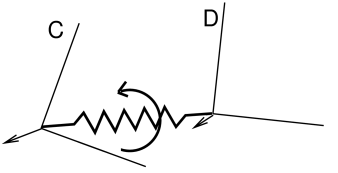

Each joint is associated with two coordinate frames C and D which move

with respect to each other as the joint moves. The allowed joint

motions therefore correspond to the allowed values of the joint transform

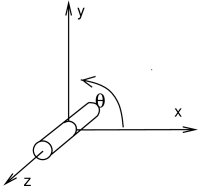

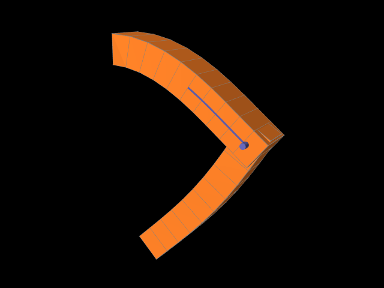

![]() . D is the base frame and C is the motion

frame. For a revolute joint (Figure 3.4), C can

move with respect to D by rotating about the z axis. Other motions are

prohibited.

. D is the base frame and C is the motion

frame. For a revolute joint (Figure 3.4), C can

move with respect to D by rotating about the z axis. Other motions are

prohibited. ![]() should therefore alway have the form

should therefore alway have the form

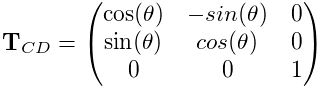

|

(3.6) |

where ![]() is the angle of joint rotation and is known as the joint parameter. Other joints have different parameterizations, with

the number of parameters equaling the number of degrees of freedom

available to the joint. When

is the angle of joint rotation and is known as the joint parameter. Other joints have different parameterizations, with

the number of parameters equaling the number of degrees of freedom

available to the joint. When ![]() (where

(where ![]() is the

identity transform), the parameters are all (usually) equal to zero,

and the joint is said to be in the zero state.

is the

identity transform), the parameters are all (usually) equal to zero,

and the joint is said to be in the zero state.

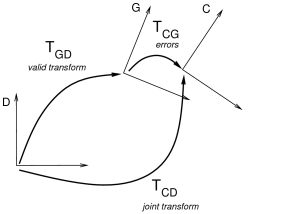

In practice, due to numerical errors and/or compliance in the joint,

the joint transform ![]() may

sometimes deviate from the allowed set of values dictated by the joint

type. In ArtiSynth, this is accounted for by introducing an additional

constraint frame G between D and C (Figure 3.5).

G is computed to be the nearest frame to C that lies exactly

in the joint constraint space.

may

sometimes deviate from the allowed set of values dictated by the joint

type. In ArtiSynth, this is accounted for by introducing an additional

constraint frame G between D and C (Figure 3.5).

G is computed to be the nearest frame to C that lies exactly

in the joint constraint space. ![]() is therefore a

valid transform for the joint,

is therefore a

valid transform for the joint, ![]() accommodates the error,

and the whole joint transform is given by the composition

accommodates the error,

and the whole joint transform is given by the composition

| (3.7) |

If there is no compliance or joint error, then frames G and C are the

same, ![]() , and

, and ![]() .

.

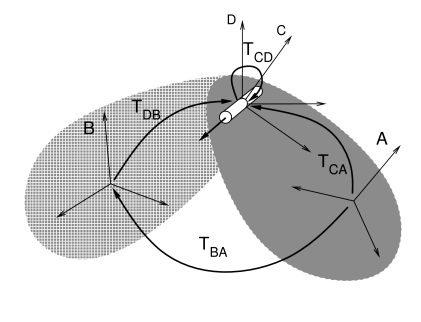

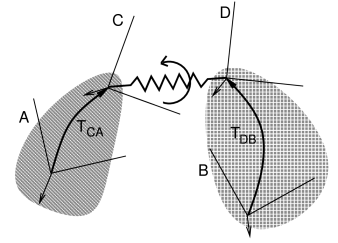

In general, each joint is attached to two rigid bodies A and B, with

frame C being fixed to body A and frame D being fixed to body B. The

restrictions of the joint transform ![]() therefore restrict the

relative poses of A and B.

therefore restrict the

relative poses of A and B.

Except in special cases, the joint coordinate frames C and D are not

coincident with the body frames A and B. Instead, they are located

relative to A and B by the transforms ![]() and

and ![]() ,

respectively (Figure 3.6).

Since

,

respectively (Figure 3.6).

Since ![]() and

and ![]() are both fixed, the pose

of B relative to A can be determined from

the joint transform

are both fixed, the pose

of B relative to A can be determined from

the joint transform ![]() :

:

| (3.8) |

(See Section A.2 for a discussion of determining transforms between related coordinate frames).

3.3.2 Creating Joints

Joint components in ArtiSynth are implemented by subclasses of BodyConnector. Some of the commonly used ones are described in Section 3.3.4.

An application creates a joint by constructing it and adding it to a MechModel. Most joints generally have a constructor of the form

which specifies the rigid bodies A and B which the joint connects,

along with the transform ![]() giving the pose of the joint base

frame D in world coordinates. Then constructor then assumes that the

joint is in the zero state, so that C and D are the same and

giving the pose of the joint base

frame D in world coordinates. Then constructor then assumes that the

joint is in the zero state, so that C and D are the same and

![]() and

and ![]() , and then computes

, and then computes

![]() and

and ![]() from

from

| (3.9) | ||||

| (3.10) |

where ![]() and

and ![]() are the poses of A and B.

The same body and transform settings can be made on an existing

joint using the method

setBodies(bodyA, bodyB, TDW).

are the poses of A and B.

The same body and transform settings can be made on an existing

joint using the method

setBodies(bodyA, bodyB, TDW).

Alternatively, if we prefer to explicitly specify ![]() or

or ![]() , then we

can determine

, then we

can determine ![]() from

from ![]() or

or ![]() using

using

| (3.11) | ||||

| (3.12) |

For example, if we know ![]() , this can be accomplished using

the following code fragment:

, this can be accomplished using

the following code fragment:

Another method,

setBodies(bodyA, TCA, bodyB, TDB), allows us to set both values of

![]() or

or ![]() explicitly. This is useful if the joint

transform

explicitly. This is useful if the joint

transform ![]() is known to be some value other than the

identity, in which case

is known to be some value other than the

identity, in which case ![]() or

or ![]() can be computed

from (3.8), where

can be computed

from (3.8), where ![]() is given by

is given by

| (3.13) |

For instance, if we know ![]() and the joint transform

and the joint transform ![]() ,

then we can compute

,

then we can compute ![]() from

from

| (3.14) |

and set up the joint as follows:

Some joint implementations provide the ability to explicitly set the

joint parameter(s) after it has been created and added to the MechModel, making it easy to ``move'' the joint to a specific

configuration. For example, RevoluteJoint provides the method

setTheta().

This causes the transform ![]() to be explicitly set to the value

implied by the joint parameters, and the pose of either body A or B is

changed to accommodate this. Whether body A or B is moved depends on

which one is the least connected to ``ground'', and other bodies that have

joint connections to the moved body are moved as well.

to be explicitly set to the value

implied by the joint parameters, and the pose of either body A or B is

changed to accommodate this. Whether body A or B is moved depends on

which one is the least connected to ``ground'', and other bodies that have

joint connections to the moved body are moved as well.

If desired, joints can be connected to only a single rigid body. In this case, the second body B is simply assumed to be ``ground'', and the coordinate frame B is instead taken to be the world coordinate frame W. The corresponding calls to the joint constructor or setBodies() take the form

or

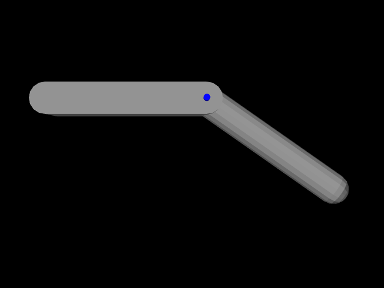

3.3.3 Example: A simple revolute joint

A simple model showing two rigid bodies connected by a joint is defined in

artisynth.demos.tutorial.RigidBodyJoint

The build method for this model is given below:

A MechModel is created as usual at line 4. However, in this

example, we also set some parameters for it:

setGravity() is

used to set the gravity acceleration vector to ![]() instead

of the default value of

instead

of the default value of ![]() , and the frameDamping

and rotaryDamping properties (Section

3.2.6) are set to provide appropriate damping.

, and the frameDamping

and rotaryDamping properties (Section

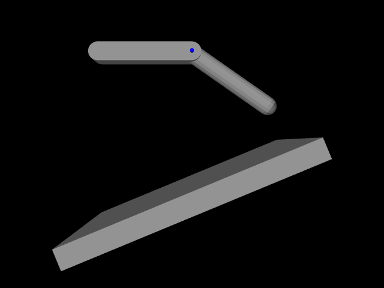

3.2.6) are set to provide appropriate damping.

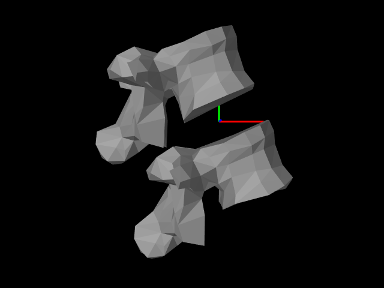

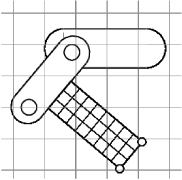

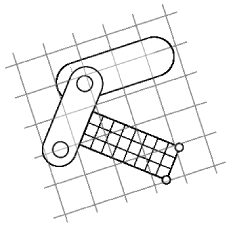

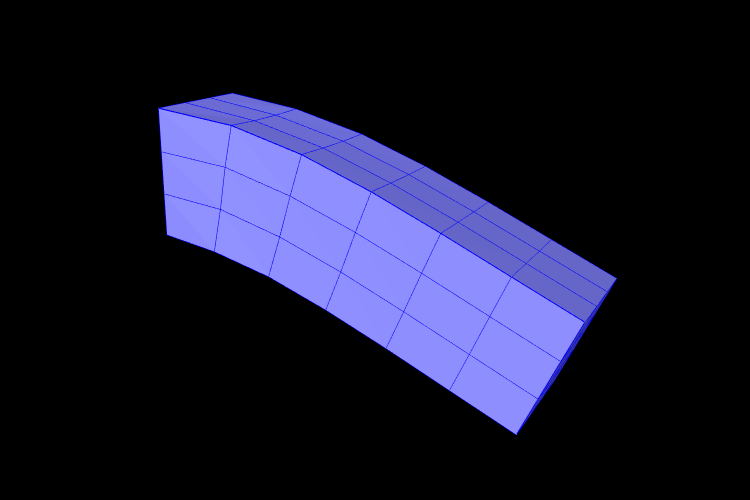

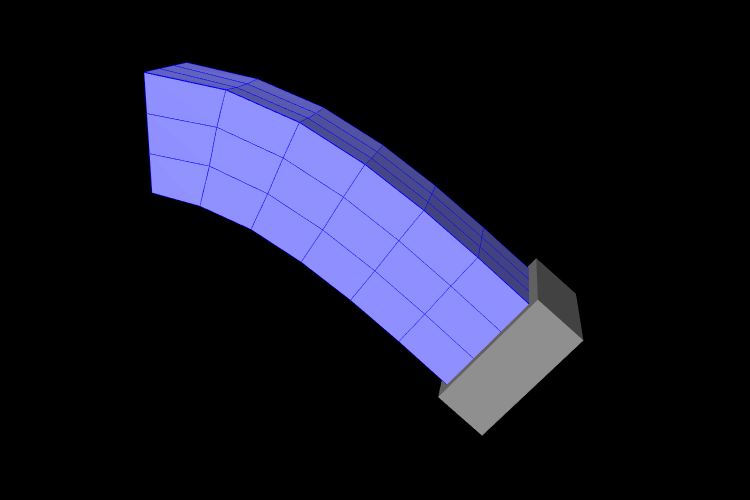

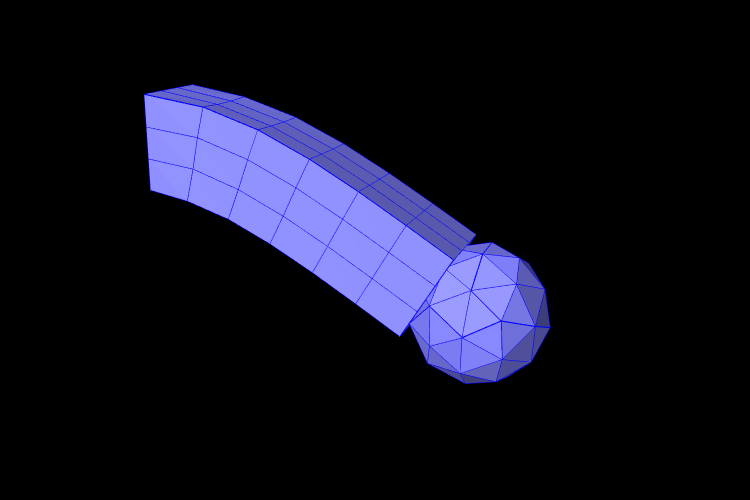

Each of the two rigid bodies are created from a mesh and a density. The meshes themselves are created using the factory methods MeshFactory.createRoundedBox() and MeshFactory.createRoundedCylinder() (lines 13 and 22), and then RigidBody.createFromMesh() is used to turn these into rigid bodies with a density of 0.2 (lines 17 and 25). The pose of the two bodies is set using RigidTransform3d objects created with x, y, z translation and axis-angle orientation values (lines 18 and 26).

The revolute joint is implemented using RevoluteJoint, which is constructed at line 32 with the joint coordinate frame D being located in world coordinates by TDW as described in Section 3.3.2.

Once the joint is created and added to the MechModel, the method

setTheta() is

used to explicitly set the joint parameter to 35 degrees. The joint

transform ![]() is then set appropriately and bodyA is moved

to accommodate this (bodyA being chosen since it is the freest

to move).

is then set appropriately and bodyA is moved

to accommodate this (bodyA being chosen since it is the freest

to move).

Finally, render properties are set starting at line 42. A revolute joint is rendered as a line segment, using the line render properties (Section 4.3), with lineStyle and lineColor set to Cylinder and BLUE, respectively, by default. The cylinder radius and length are specified by the line render property lineRadius and the revolute joint property axisLength, which are set explicitly in the code.

3.3.4 Commonly used joints

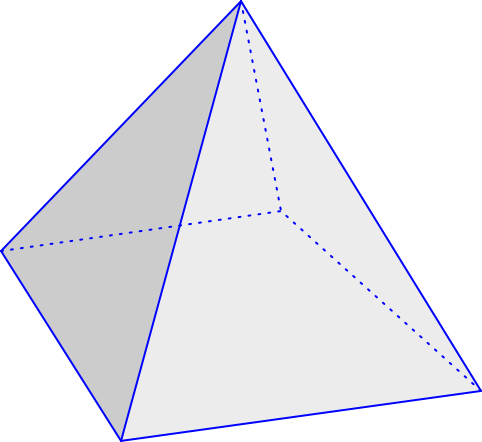

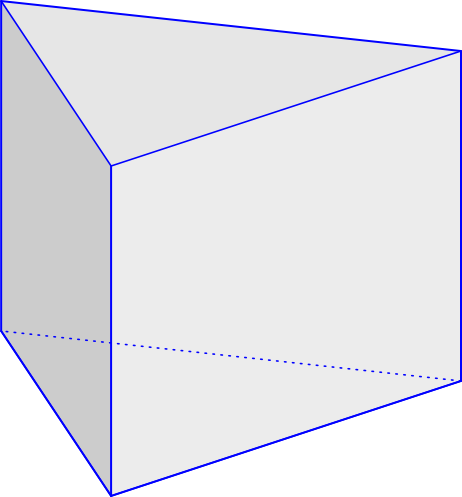

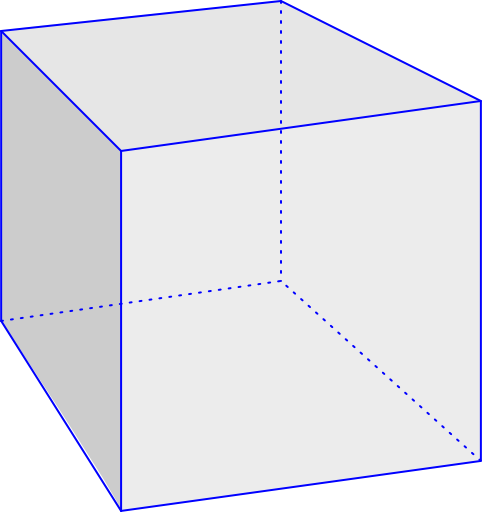

|

|

|

|

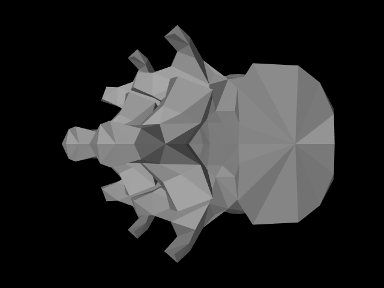

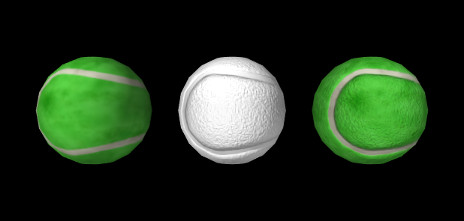

Some of the joints commonly used by ArtiSynth are shown in Figure 3.8. Each illustration shows the allowed joint motion relative to the base coordinate frame D. Clockwise from the top-left, these joints are:

- Revolute joint

-

A one DOF joint which allows rotation by an angle

about

the z axis.

about

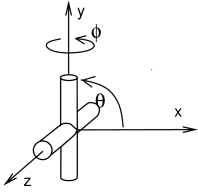

the z axis. - Roll-pitch joint

-

A two DOF joint, similar to the revolute joint, which allows the rotation about z to be followed by an additional rotation

about

the (new) y axis.

about

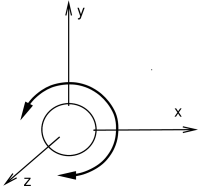

the (new) y axis. - Spherical joint

-

A three DOF joint in which the origin remains fixed but any orientation may be assumed.

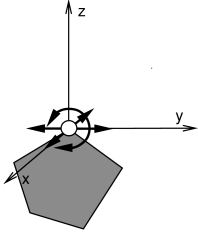

- Planar connector

-

A five DOF joint which connects a point on a single rigid body to a plane in space. The point may slide freely in the x-y plane, and the body may assume any orientation about the point.

3.4 Frame springs

Another way to connect two rigid bodies together is to use a frame spring, which is a six dimensional spring that generates restoring forces and moments between coordinate frames.

3.4.1 Frame spring coordinate frames

The basic idea of a frame spring is shown in Figure

3.9. It generates restoring forces and moments on

two frames C and D which are a function of ![]() and

and ![]() (the spatial velocity of frame D with respect to frame C).

(the spatial velocity of frame D with respect to frame C).

Decomposing forces into stiffness and damping terms, the force

![]() and moment

and moment ![]() acting on C can be expressed as

acting on C can be expressed as

| (3.15) |

where the translational and rotational forces ![]() ,

, ![]() ,

,

![]() , and

, and ![]() are general functions of

are general functions of ![]() and

and

![]() .

.

The forces acting on D are equal and opposite, so that

| (3.16) |

If frames C and D are attached to a pair of rigid bodies A and B, then

a frame spring can be used to connect them in a manner analogous to a

joint. As with joints, C and D generally do not coincide with the body

frames, and are instead offset from them by fixed transforms ![]() and

and ![]() (Figure 3.10).

(Figure 3.10).

3.4.2 Frame materials

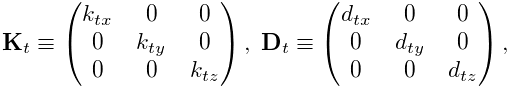

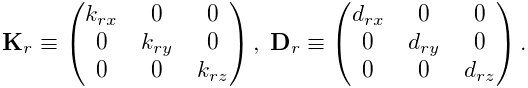

The restoring forces (3.15) generated in a frame spring depend on the frame material associated with the spring. Frame materials are defined in the package artisynth.core.materials, and are subclassed from FrameMaterial. The most basic type of material is a LinearFrameMaterial, in which the restoring forces are determined from

where ![]() gives the small angle approximation of the

rotational components of

gives the small angle approximation of the

rotational components of ![]() with respect to the

with respect to the ![]() ,

, ![]() , and

, and

![]() axes, and

axes, and

|

||

|

are the stiffness and damping matrices. The diagonal values defining

each matrix are stored in the 3-dimensional vectors ![]() ,

, ![]() ,

,

![]() , and

, and ![]() which are exposed as the stiffness, rotaryStiffness, damping, and rotaryDamping properties of

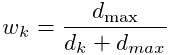

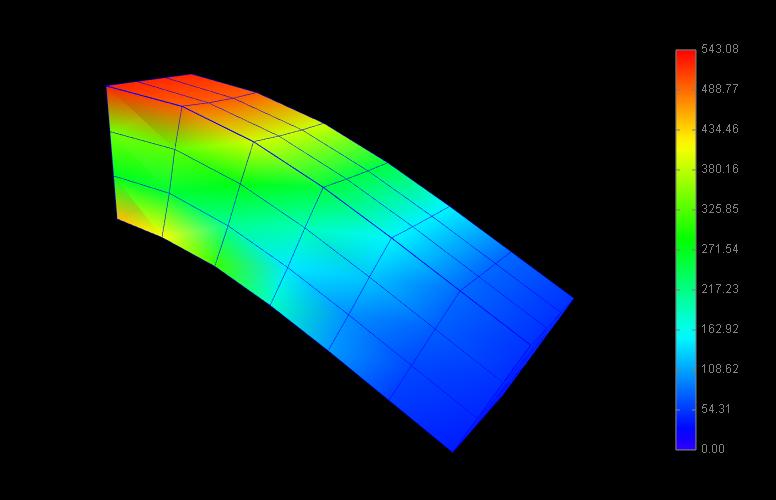

the material. Each of these specifies stiffness or damping values